Descriptive Statistics

Descriptive Statistics is the part of Statistics in charge of representing, analysing and summarizing the information contained in the sample.

After the sampling process, this is the next step in every statistical study and usually consists of:

- To classify, group and sort the data of the sample.

- To tabulate and plot data according to their frequencies.

- To calculate numerical measures that summarize the information contained in the sample (sample statistics).

Descriptive Statistics has no inferential power, so do not generalize to the population from the measures it computes!

Frequency distribution

The study of a statistical variable starts by measuring the variable in the individuals of the sample and classifying the values.

There are two ways of classifying data:

- Non-grouping: Sorting values from lowest to highest (if there is an order). Used with qualitative variables and discrete variables with few distinct values.

- Grouping: Grouping values into intervals (classes) and sorting the intervals from lowest to highest. Used with continuous variables and discrete variables with many distinct values.

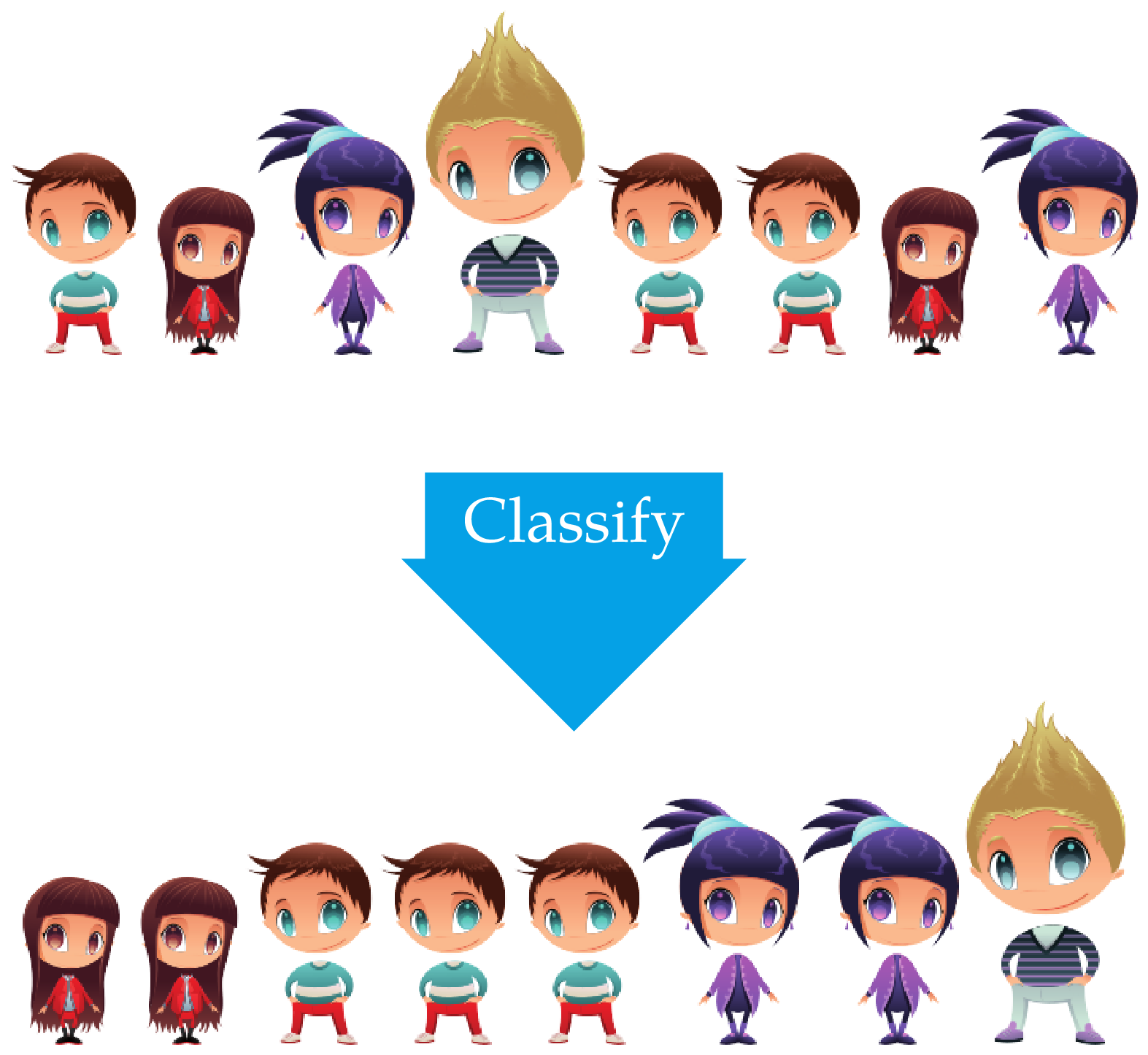

Sample classification

It consists in grouping the values that are the same and sorting them if there is an order among them.

Example.

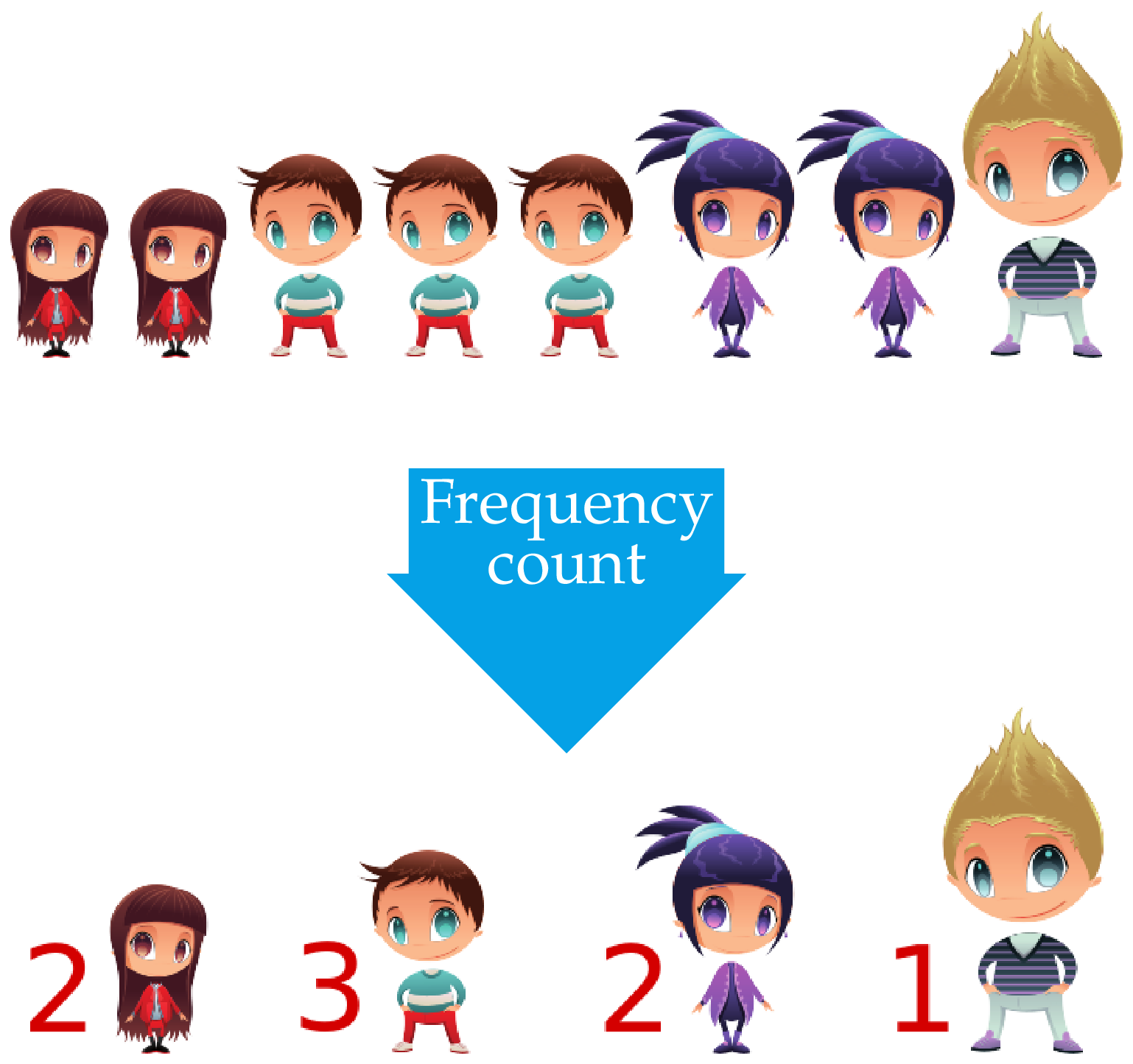

Frequency count

It consists in counting the number of times that every value appears in the sample.

Example.

Sample frequencies

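Definition - Sample frequencies. Given a sample of $n$ values of a variable $X$, for every value $x_i$ of the variable we define:

- Absolute frequency $n_i$: The number of times that value $x_i$ appears in the sample.

- Relative frequency $f_i$: The proportion of times that value $x_i$ appears in the sample, $f_i = n_i/n$.

- Cumulative absolute frequency $N_i$: The number of values in the sample less than or equal to $x_i$, $N_i = n_1 + \cdots + n_i$.

- Cumulative relative frequency $F_i$: The proportion of values in the sample less than or equal to $x_i$, $F_i = N_i/n$.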

Frequency table

The set of values of a variable with their respective frequencies is called frequency distribution of the variable in the sample, and it is usually represented as a frequency table.

| Value | Absolute frequency | Relative frequency | Cumulative absolute frequency | Cumulative relative frequency |
|---|---|---|---|---|
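As a minimal sketch of how such a table can be built, the Python snippet below counts a small hypothetical sample (the values are illustrative, not the data of the examples in this section) and derives the four frequencies for every distinct value. The function name `frequency_table` is an assumption made for this illustration.

```python
from collections import Counter

def frequency_table(sample):
    """Return rows (value, absolute, relative, cumulative absolute,
    cumulative relative), sorted by value."""
    n = len(sample)
    counts = Counter(sample)
    rows = []
    cumulative = 0
    for value in sorted(counts):
        absolute = counts[value]      # times the value appears
        cumulative += absolute        # values less than or equal to it
        rows.append((value, absolute, absolute / n, cumulative, cumulative / n))
    return rows

# Hypothetical sample, used only for illustration.
sample = [0, 1, 1, 2, 2, 2, 3, 2, 1, 2]
rows = frequency_table(sample)
for row in rows:
    print(row)
```

Sorting the values before accumulating is what gives the cumulative columns their meaning, so for a nominal variable only the first two frequency columns should be read.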

Example - Quantitative variable and non-grouped data. The numbers of children in 25 families are:

The frequency table for the number of children in this sample is

Example - Quantitative variable and grouped data. The heights (in cm) of 30 students are:

162, 187, 198, 177, 178, 165, 154, 188, 166, 171,

175, 182, 167, 169, 172, 186, 172, 176, 168, 187.

The frequency table for the height in this sample is

Classes construction

Intervals are known as classes and the center of intervals as class marks.

When grouping data into intervals, the following rules must be taken into account:

- The number of intervals should not be too big nor too small. A usual rule of thumb is to take a number of intervals approximately equal to $\sqrt{n}$ or $\log_2(n)$, where $n$ is the sample size.

- The intervals must not overlap and must cover the entire range of values. It does not matter if intervals are left-open and right-closed or vice versa.

- The minimum value must fall in the first interval and the maximum value in the last.
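The rules above can be sketched in a few lines of Python. The snippet uses the twenty heights that are listed earlier in this section and groups them into right-closed classes of width 10 starting at 150. The starting point, the width and the function name `group_into_classes` are assumptions made for this illustration, and the square root of the sample size is shown only as one common rule of thumb for the number of classes.

```python
import math

def group_into_classes(sample, start, width):
    """Count values in right-closed classes (start, start + width], ..."""
    if min(sample) <= start:
        raise ValueError("start must be below the minimum of the sample")
    k = math.ceil((max(sample) - start) / width)      # classes needed to reach the maximum
    counts = [0] * k
    for x in sample:
        index = math.ceil((x - start) / width) - 1    # class (a, b] that contains x
        counts[index] += 1
    classes = [(start + i * width, start + (i + 1) * width) for i in range(k)]
    marks = [(a + b) / 2 for a, b in classes]         # class marks (centres)
    return classes, marks, counts

heights = [162, 187, 198, 177, 178, 165, 154, 188, 166, 171,
           175, 182, 167, 169, 172, 186, 172, 176, 168, 187]

suggested = round(math.sqrt(len(heights)))            # rule-of-thumb number of classes
classes, marks, counts = group_into_classes(heights, start=150, width=10)
print(suggested, classes, marks, counts)
```

Because the classes are contiguous and the last one reaches the maximum, they do not overlap and every value is counted exactly once.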

Example - Qualitative variable. The blood types of 30 people are:

The frequency table of the blood type in this sample is

Observe that in this case cumulative frequencies make no sense, as there is no order in the variable.

Frequency distribution graphs

Usually the frequency distribution is also displayed graphically. Depending on the type of variable and whether data has been grouped or not, there are different types of charts:

- Bar chart

- Histogram

- Line or polygon chart

- Pie chart

Bar chart

A bar chart consists of a set of bars, one for every value or category of the variable, plotted on a coordinate system.

Usually the values or categories of the variable are represented on the $x$-axis, and the frequencies on the $y$-axis.

Depending on the type of frequency represented on the $y$-axis, we get different types of bar charts.

Sometimes a polygon, known as frequency polygon, is plotted joining the top of every bar with straight lines.

Example. The bar chart below shows the absolute frequency distribution of the number of children in the previous sample.

The bar chart below shows the relative frequency distribution of the number of children with the frequency polygon.

The bar chart below shows the cumulative absolute frequency distribution of the number of children.

And the bar chart below shows the cumulative relative frequency distribution of the number of children with the frequency polygon.

Histogram

A histogram is similar to a bar chart but for grouped data.

Usually the classes or grouping intervals are represented on the $x$-axis, and the frequencies on the $y$-axis.

Depending on the type of frequency represented on the $y$-axis, we get different types of histograms.

As with the bar chart, the frequency polygon can be drawn joining the top centre of every bar with straight lines.

Example. The histogram below shows the absolute frequency distribution of heights.

The histogram below shows the relative frequency distribution of heights with the frequency polygon.

The cumulative frequency polygon (for absolute or relative frequencies) is known as ogive.

Example. The histogram and the ogive below show the cumulative relative distribution of heights.

Observe that in the ogive we join the top right corner of bars with straight lines, instead of the top center, because we do not reach the accumulated frequency of the class until the end of the interval.

Pie chart

A pie chart consists of a circle divided into slices, one for every value or category of the variable. Each slice is called a sector and its angle or area is proportional to the frequency of the corresponding value or category.

Pie charts can represent absolute or relative frequencies, but not cumulative frequencies, and are used with nominal qualitative variables. For ordinal qualitative or quantitative variables it is better to use bar charts, because it is easier to perceive differences in one dimension (length of bars) than in two dimensions (areas of sectors).

Example. The pie chart below shows the relative frequency distribution of blood types.

The normal distribution

Distributions with different properties will show different shapes.

Outliers

One of the main problems in samples is the presence of outliers, values that are very different from the rest of the values in the sample.

Example. The last height of the following sample of heights is an outlier.

It is important to detect outliers before doing any analysis, because outliers usually distort the results.

They always appear at the ends of the distribution, and can be detected easily with a box and whiskers chart (as will be shown later).

Outliers management

With big samples outliers have less importance and can be left in the sample.

With small samples we have several options:

- Remove the outlier if it is an error.

- Replace the outlier by the lowest or highest value in the distribution that is not an outlier, if the outlier is not an error and does not fit the theoretical distribution.

- Leave the outlier if it is not an error, and change the theoretical model so that it fits the outliers.

Sample statistics

The frequency table and charts summarize and give an overview of the distribution of values of the studied variable in the sample, but it is difficult to describe some aspects of the distribution from them, for example, which values are the most representative of the distribution, how spread out the data are, which data could be considered outliers, or how symmetrical the distribution is.

To describe those aspects of the sample distribution, more specific numerical measures, called sample statistics, are used.

According to the aspect of the distribution that they study, there are different types of statistics:

- Measures of location: They measure the values where data are concentrated or that divide the distribution into equal parts.

- Measures of dispersion: They measure the spread of data.

- Measures of shape: They measure aspects related to the shape of the distribution, such as the symmetry and the concentration of data around the mean.

Location statistics

There are two groups:

Central location measures: They measure the values where data are concentrated, usually at the centre of the distribution. These values are the ones that best represent the sample data. The most important are:

- Arithmetic mean

- Median

- Mode

Non-central location measures: They divide the sample data into equal parts. The most important are:

- Quartiles.

- Deciles.

- Percentiles.

Arithmetic mean

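Definition - Sample arithmetic mean. The sample arithmetic mean of a variable $X$ is the sum of the observed values in the sample divided by the sample size:

$$\bar x = \frac{\sum x_i}{n}$$

It can be calculated from the frequency table with the formula

$$\bar x = \frac{\sum x_i n_i}{n} = \sum x_i f_i$$

where $n_i$ and $f_i$ are the absolute and relative frequencies of value $x_i$.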

In most cases the arithmetic mean is the value that best represents the observed values in the sample.

Watch out! It cannot be calculated with qualitative variables.
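A minimal Python sketch of the two ways of computing the mean is shown below: directly from the observed values, and from a table that maps every distinct value to its absolute frequency. The sample is hypothetical and the function names are assumptions made for this illustration.

```python
from collections import Counter

def mean_from_values(sample):
    """Sum of the observed values divided by the sample size."""
    return sum(sample) / len(sample)

def mean_from_table(table):
    """Mean from a mapping {value: absolute frequency}."""
    n = sum(table.values())
    return sum(value * count for value, count in table.items()) / n

# Hypothetical sample, used only for illustration.
sample = [0, 1, 1, 2, 2, 2, 3, 2, 1, 2]
table = Counter(sample)   # 0 appears once, 1 three times, 2 five times, 3 once

print(mean_from_values(sample), mean_from_table(table))
```

With non-grouped data both computations give the same result, since the table only collects repeated values.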

Example - Non-grouped data. Using the data of the sample with the number of children of families, the arithmetic mean is

or using the frequency table

That means that the value that best represents the number of children in the families of the sample is 1.76 children.

Example - Grouped data. Using the data of the sample of student heights, the arithmetic mean is

or using the frequency table and taking the class marks as the values $x_i$,

Observe that when the mean is calculated from the table the result differs a little from the real value, because the values used in the calculations are the class marks instead of the actual values.
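The effect can be checked with a short Python sketch. It uses only the twenty heights that are listed earlier in this section, and it assumes classes of width 10 starting at 150, closed on the right; the helper `class_mark` is a hypothetical name introduced for this illustration.

```python
import math

heights = [162, 187, 198, 177, 178, 165, 154, 188, 166, 171,
           175, 182, 167, 169, 172, 186, 172, 176, 168, 187]

def class_mark(x, start=150, width=10):
    """Centre of the right-closed class (a, a + width] that contains x."""
    index = math.ceil((x - start) / width) - 1
    return start + index * width + width / 2

# Mean of the actual values versus mean of the class marks.
exact_mean = sum(heights) / len(heights)
grouped_mean = sum(class_mark(x) for x in heights) / len(heights)
print(exact_mean, grouped_mean)
```

Replacing every value by its class mark discards the position of the value inside the class, which is why the two results are close but not identical.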

Weighted mean

In some cases the values of the sample have different importance. In that case the importance or weight of each value of the sample must be taken into account when calculating the mean.

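Definition - Sample weighted mean. Given a sample of values $x_1, \ldots, x_n$ where every value $x_i$ has a weight $w_i$, the sample weighted mean is the sum of the products of every value by its weight, divided by the sum of the weights:

$$\bar x_w = \frac{\sum x_i w_i}{\sum w_i}$$

From the frequency table it can be calculated with the formula

$$\bar x_w = \frac{\sum x_i w_i n_i}{\sum w_i n_i}$$

where $n_i$ is the absolute frequency of value $x_i$.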

Example. Assume that a student wants to calculate a representative measure of his/her performance in a course. The grades and the credits of the subjects are

| Subject | Credits | Grade |
|---|---|---|
| Maths | 6 | 5 |
| Economics | 4 | 3 |
| Chemistry | 8 | 6 |

The arithmetic mean is

$$\bar x = \frac{5 + 3 + 6}{3} = 4.67$$

However, this measure does not represent well the performance of the student, as not all the subjects have the same importance and require the same effort to pass. Subjects with more credits require more work and must have more weight in the calculation of the mean.

In this case it is better to use the weighted mean, using the credits as the weights of grades, as a representative measure of the student's effort:

$$\bar x_w = \frac{5\cdot 6 + 3\cdot 4 + 6\cdot 8}{6 + 4 + 8} = \frac{90}{18} = 5$$
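The same calculation can be sketched in Python with the grades and credits of the table above. The function name `weighted_mean` is an assumption made for this illustration.

```python
def weighted_mean(values, weights):
    """Sum of value * weight divided by the sum of the weights."""
    return sum(x * w for x, w in zip(values, weights)) / sum(weights)

grades = [5, 3, 6]     # Maths, Economics, Chemistry
credits = [6, 4, 8]

plain = sum(grades) / len(grades)          # every subject counts the same
weighted = weighted_mean(grades, credits)  # subjects count according to their credits
print(round(plain, 2), weighted)
```

The weighted mean is higher than the plain mean here because the best grade belongs to the subject with the most credits.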

Median

The median divides the sample distribution into two equal parts, that is, there are the same number of values above and below the median. Therefore, it has cumulative absolute frequency $n/2$ and cumulative relative frequency $0.5$.

Watch out! It cannot be calculated for nominal variables.

With non-grouped data, there are two possibilities:

- Odd sample size: The median is the value in position $\frac{n+1}{2}$ of the sorted sample.

- Even sample size: The median is the average of the values in positions $\frac{n}{2}$ and $\frac{n}{2}+1$ of the sorted sample.

Example. Using the data of the sample with the number of children of families, the sample size is 25, which is odd, so the median is the value in position $\frac{25+1}{2} = 13$ of the sorted sample.

And the median is 2 children.

With the frequency table, the median is the lowest value with a cumulative absolute frequency greater than or equal to $13$, or equivalently with a cumulative relative frequency greater than or equal to $0.5$.
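Both procedures for non-grouped data can be sketched in Python as follows. The function names and the small samples are hypothetical. The table version also handles the case of an even sample size in which the middle falls exactly between two distinct values, where the two values are averaged.

```python
def median(sample):
    """Median of non-grouped data from the sorted sample."""
    ordered = sorted(sample)
    n = len(ordered)
    middle = n // 2
    if n % 2 == 1:
        return ordered[middle]                       # position (n + 1) / 2, counting from 1
    return (ordered[middle - 1] + ordered[middle]) / 2

def median_from_table(table):
    """Median from a mapping {value: absolute frequency}."""
    n = sum(table.values())
    values = sorted(table)
    cumulative = 0
    for i, value in enumerate(values):
        cumulative += table[value]
        if 2 * cumulative > n:                       # cumulative frequency exceeds n / 2
            return value
        if 2 * cumulative == n:                      # middle lies between two distinct values
            return (value + values[i + 1]) / 2

print(median([3, 1, 2]), median([4, 1, 3, 2]))
print(median_from_table({0: 2, 1: 3, 2: 4}))
```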

Median calculation for grouped data

For grouped data the median is calculated from the ogive, interpolating in the class with cumulative relative frequency 0.5.

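Both expressions are equal as the angle $\alpha$ is the same in both triangles. Writing $(l_{i-1}, l_i]$ for the class that contains the median, and $F_{i-1}$ and $F_i$ for the cumulative relative frequencies at its limits, this gives

$$\frac{0.5 - F_{i-1}}{Me - l_{i-1}} = \frac{F_i - F_{i-1}}{l_i - l_{i-1}} \quad\Longrightarrow\quad Me = l_{i-1} + \frac{0.5 - F_{i-1}}{F_i - F_{i-1}}\,(l_i - l_{i-1})$$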

Example - Grouped data. Using the data of the sample of student heights, the median falls in class (170,180].

And interpolating in interval (170,180] we get

Equating both expressions and solving the equation, we get

This means that half of the students in the sample have a height lower than or equal to 174.54 cm and the other half have a height greater than or equal to it.
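The interpolation can be sketched in Python as shown below. The frequency table of the heights is not reproduced in the text, so the class counts used here are hypothetical; they were chosen so that the median falls in class (170,180]. The function name `grouped_median` is also an assumption made for this illustration.

```python
def grouped_median(limits, counts):
    """Median of grouped data by linear interpolation along the ogive.

    limits: class boundaries l_0 < l_1 < ... < l_k
    counts: absolute frequency of every class
    """
    n = sum(counts)
    cumulative = 0
    for i, count in enumerate(counts):
        previous = cumulative
        cumulative += count
        if cumulative >= n / 2:                      # first class that reaches half the sample
            lower, upper = limits[i], limits[i + 1]
            return lower + (n / 2 - previous) / count * (upper - lower)

limits = [150, 160, 170, 180, 190, 200]
counts = [2, 8, 11, 7, 2]                            # hypothetical counts, n = 30
print(grouped_median(limits, counts))
```

The fraction `(n / 2 - previous) / count` is the share of the median class that has to be covered to accumulate half of the sample, which is the same proportion as in the similar triangles of the ogive.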

Mode

The mode is the value with the highest frequency in the sample. With grouped data the modal class is the class with the highest frequency.

It can be calculated for all types of variables (qualitative and quantitative).

Distributions can have more than one mode.
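A minimal Python sketch that returns every mode of a sample, for quantitative or qualitative values, is shown below. The samples and the function name `modes` are hypothetical.

```python
from collections import Counter

def modes(sample):
    """All values that share the highest absolute frequency."""
    counts = Counter(sample)
    highest = max(counts.values())
    return sorted(value for value, count in counts.items() if count == highest)

print(modes([0, 1, 1, 2, 2, 2, 3]))        # one mode
print(modes(["A", "O", "A", "B", "O"]))    # two modes
```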

Example. Using the data of the sample with the number of children of families, the value with the highest frequency is

Using the data of the sample of student heights, the class with the highest frequency is

Which central tendency statistic should I use?

In general, when all the central tendency statistics can be calculated, it is advisable to use them as representative values in the following order:

- The mean. The mean takes more information from the sample than the others, as it takes into account the magnitude of data.

- The median. The median takes less information than the mean but more than the mode, as it takes into account the order of data.

- The mode. The mode is the measure that takes the least information from the sample, as it only takes into account the absolute frequency of values.

But be careful with outliers, as the mean can be distorted by them. In that case it is better to use the median as the most representative value.
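The effect of an outlier on each statistic can be seen with a short Python sketch that uses the standard `statistics` module. The sample of 7 families below is hypothetical and contains one unusually large value.

```python
import statistics

# Hypothetical sample of 7 families with one unusually large value.
children = [0, 1, 1, 2, 2, 2, 13]

mean = statistics.mean(children)      # pulled upwards by the value 13
median = statistics.median(children)  # depends only on the order of the values
mode = statistics.mode(children)      # depends only on the frequencies
print(mean, median, mode)
```

Replacing 13 by any other value greater than 2 would change the mean but leave the median and the mode as they are.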

Example. If a sample of number of children of 7 families is

then,

Which measure better represents the number of children in the sample?

Non-central location measures

The non-central location measures or quantiles divide the sample distribution in equal parts.

The most used are:

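- Quartiles: Divide the distribution into 4 equal parts. There are 3 quartiles: $Q_1$, $Q_2$ and $Q_3$, which accumulate 25%, 50% and 75% of the data.

- Deciles: Divide the distribution into 10 equal parts. There are 9 deciles: $D_1, \ldots, D_9$, which accumulate 10%, ..., 90% of the data.

- Percentiles: Divide the distribution into 100 equal parts. There are 99 percentiles: $P_1, \ldots, P_{99}$, which accumulate 1%, ..., 99% of the data.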

Observe that there is a correspondence between quartiles, deciles and percentiles. For example, first quartile coincides with percentile 25, and fourth decile coincides with the percentile 40.

Quantiles are calculated in a similar way to the median. The only difference lies in the cumulative relative frequency that corresponds to every quantile.
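For non-grouped data this can be sketched in Python as the lowest value whose cumulative relative frequency reaches the proportion of the quantile. The table is hypothetical, the function name `quantile_from_table` is an assumption, and the sketch deliberately ignores the averaging that some conventions apply when the cumulative frequency equals the proportion exactly.

```python
def quantile_from_table(table, p):
    """Lowest value whose cumulative relative frequency is at least p."""
    n = sum(table.values())
    cumulative = 0
    for value in sorted(table):
        cumulative += table[value]
        if cumulative / n >= p:
            return value

# Hypothetical table {value: absolute frequency}, n = 10.
table = {0: 1, 1: 3, 2: 5, 3: 1}

q1 = quantile_from_table(table, 0.25)   # first quartile
q2 = quantile_from_table(table, 0.50)   # median, second quartile
q3 = quantile_from_table(table, 0.75)   # third quartile
p95 = quantile_from_table(table, 0.95)  # percentile 95
print(q1, q2, q3, p95)
```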

Example. Using the data of the sample with the number of children of families, the cumulative relative frequencies were

Dispersion statistics

Dispersion or spread refers to the variability of data. So, dispersion statistics measure how the data values are scattered in general, or with respect to a central location measure.

For quantitative variables, the most important are:

- Range

- Interquartile range

- Variance

- Standard deviation

- Coefficient of variation

Range

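Definition - Sample range. The sample range of a variable $X$ is the difference between the maximum and the minimum values in the sample:

$$\text{Range} = \max_i x_i - \min_i x_i$$

The range measures the largest variation among the sample data. However, it is very sensitive to outliers, as they appear at the ends of the distribution, and for that reason it is rarely used.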

Interquartile range

The following measure avoids the problem of outliers and is used much more often.

Definition - Sample interquartile range. The sample interquartile range of a variable $X$ is the difference between the third and the first quartiles:

$$IQR = Q_3 - Q_1$$

The interquartile range measures the spread of the central 50% of the data.

Box plot

The dispersion of a variable in a sample can be graphically represented with a box plot, which represents five descriptive statistics (minimum, quartiles and maximum) known as the five-number summary. It consists of a box, drawn from the lower to the upper quartile, that represents the interquartile range, and two segments, known as the lower and the upper whiskers. Usually the box is split in two by the median.

This chart is very helpful as it serves many purposes:

- It serves to measure the spread of data as it represents the range and the interquartile range.

- It serves to detect outliers, that are the values outside the interval defined by the whiskers.

- It serves to measure the symmetry of distribution, comparing the length of the boxes and whiskers above and below the median.

Example. The chart below shows a box plot of newborn weights.

To create a box plot follow the steps below:

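- Calculate the quartiles.

- Draw a box from the lower quartile $Q_1$ to the upper quartile $Q_3$.

- Split the box with the median or second quartile.

- For the whiskers, first calculate two values called fences, $f_1 = Q_1 - 1.5\,IQR$ and $f_2 = Q_3 + 1.5\,IQR$, where $IQR = Q_3 - Q_1$ is the interquartile range. The fences define the interval where data are considered normal. Any value outside that interval is considered an outlier. For the lower whisker draw a segment from the lower quartile to the lowest value in the sample greater than or equal to $f_1$. For the upper whisker draw a segment from the upper quartile to the highest value in the sample lower than or equal to $f_2$.

- Finally, if there are outliers, draw a dot at every outlier.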
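The numbers needed to draw the whiskers and the outliers can be sketched in Python as follows. The sketch assumes the usual convention of placing the fences 1.5 interquartile ranges away from the box, and it takes the quartiles as arguments because several conventions exist for computing them. The sample and the function name `box_plot_summary` are hypothetical.

```python
def box_plot_summary(sample, q1, q3):
    """Fences, whisker ends and outliers for a box plot."""
    iqr = q3 - q1
    lower_fence = q1 - 1.5 * iqr          # assumed convention: 1.5 * IQR
    upper_fence = q3 + 1.5 * iqr
    inside = [x for x in sample if lower_fence <= x <= upper_fence]
    outliers = sorted(x for x in sample if x < lower_fence or x > upper_fence)
    return {
        "fences": (lower_fence, upper_fence),
        "whiskers": (min(inside), max(inside)),   # extreme values that are not outliers
        "outliers": outliers,
    }

# Hypothetical sample with first quartile 1 and third quartile 3.
sample = [0, 1, 1, 2, 2, 2, 2, 3, 3, 9]
summary = box_plot_summary(sample, q1=1, q3=3)
print(summary)
```

Note that the whiskers end at observed values of the sample, not at the fences themselves.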

Example. The box plot for the sample with the number of children is shown below.

Deviations from the mean

Another way of measuring spread of data is with respect to a central tendency measure, as for example the mean.

In that case, the distance from every value in the sample to the mean is measured; this distance is called the deviation from the mean, $x_i - \bar x$.

If deviations are big, the mean is less representative than when they are small.

Example. The grades of 3 students in a course with subjects

All the students have the same mean, but, in which case does the mean represent better the course performance?

Variance and standard deviation

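Definition - Sample variance. The sample variance of a variable $X$ is the average of the squared deviations from the mean:

$$s^2 = \frac{\sum (x_i - \bar x)^2 n_i}{n}$$

where $n_i$ is the absolute frequency of value $x_i$.

It can also be calculated with the formula

$$s^2 = \frac{\sum x_i^2 n_i}{n} - \bar x^2$$

The variance has the units of the variable squared, and to ease its interpretation it is common to calculate its square root.

Definition - Sample standard deviation. The sample standard deviation of a variable $X$ is the positive square root of the variance:

$$s = +\sqrt{s^2}$$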

Both variance and standard deviation measure the spread of data around the mean. When the variance or the standard deviation is small, the sample data are concentrated around the mean, and the mean is a good representative measure. In contrast, when the variance or the standard deviation is high, the sample data are far from the mean, and the mean does not represent the data so well.

- Small standard deviation $\Rightarrow$ the mean is representative.

- Big standard deviation $\Rightarrow$ the mean is unrepresentative.
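A minimal Python sketch of the variance and the standard deviation is shown below. It assumes that the sum of squared deviations is divided by the sample size $n$, as is usual in descriptive statistics (not by $n - 1$), and it computes the variance in two equivalent ways: from the squared deviations and from the mean of the squares minus the squared mean. The sample and the function names are hypothetical.

```python
import math

def variance(sample):
    """Average of the squared deviations from the mean (dividing by n)."""
    n = len(sample)
    mean = sum(sample) / n
    return sum((x - mean) ** 2 for x in sample) / n

def variance_shortcut(sample):
    """Mean of the squares minus the square of the mean (dividing by n)."""
    n = len(sample)
    mean = sum(sample) / n
    return sum(x * x for x in sample) / n - mean ** 2

# Hypothetical sample, used only for illustration.
sample = [0, 1, 1, 2, 2, 2, 3, 2, 1, 2]
s2 = variance(sample)
s2_short = variance_shortcut(sample)
s = math.sqrt(s2)
print(s2, s2_short, s)
```

The shortcut needs a single pass over the data, but with floating point numbers it can lose precision when the mean is large compared to the spread, so the first form is usually the safer choice in code.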

Example. The following samples contain the grades of 2 students in 2 subjects

Which mean is more representative?

Example - Non-grouped data. Using the data of the sample with the number of children of families, with mean $\bar x = 1.76$ children, the variance is

and the standard deviation is

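Compared to the range, which is 4 children, the standard deviation is not very large, so we can conclude that the dispersion of the distribution is small and consequently the mean, $\bar x = 1.76$ children, represents the number of children of the families in the sample fairly well.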

Example - Grouped data. Using the data of the sample with the heights of students and grouping heights in classes, we got a mean

and the standard deviation is

This value is quite small compared to the range of the variable, that goes from 150 to 200 cm, therefore the distribution of heights has little dispersion and the mean is very representative.

Coefficient of variation

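Both variance and standard deviation have units, which makes them difficult to interpret, especially when comparing distributions of variables with different units.

For that reason it is also common to use the following dispersion measure that has no units.

Definition - Sample coefficient of variation. The sample coefficient of variation of a variable $X$ is the quotient between the standard deviation and the absolute value of the mean:

$$cv = \frac{s}{|\bar x|}$$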

The coefficient of variation measures the relative dispersion of data around the sample mean.

As it has no units, it is easier to interpret: The higher the coefficient of variation is, the higher the relative dispersion with respect to the mean and the less representative the mean is.

The coefficient of variation is very helpful to compare dispersion in distributions of different variables, even if the variables have different units.
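As a sketch of such a comparison, the Python snippet below computes the coefficient of variation of two samples with different units using the standard `statistics` module, where `pstdev` divides by the sample size. The sample of children is hypothetical, the heights are the twenty values listed earlier in this section, and the function name is an assumption made for this illustration.

```python
import statistics

def coefficient_of_variation(sample):
    """Standard deviation (dividing by n) over the absolute value of the mean."""
    return statistics.pstdev(sample) / abs(statistics.mean(sample))

children = [0, 1, 1, 2, 2, 2, 3, 2, 1, 2]                      # hypothetical, in children
heights = [162, 187, 198, 177, 178, 165, 154, 188, 166, 171,
           175, 182, 167, 169, 172, 186, 172, 176, 168, 187]   # in cm

cv_children = coefficient_of_variation(children)
cv_heights = coefficient_of_variation(heights)
print(cv_children, cv_heights)
```

Although the standard deviation of the heights is much larger in absolute terms, its coefficient of variation is smaller, because it is measured relative to a much larger mean.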

Example. In the sample of the number of children, where the mean was

In the sample of heights, where the mean was

This means that the relative dispersion in the heights distribution is lower than in the number of children distribution, and consequently the mean of height is more representative than the mean of number of children.

Shape statistics

They are measures that describe the shape of the distribution.

In particular, the most important aspects are:

Symmetry: It measures the symmetry of the distribution with respect to the mean. The most used statistic is the coefficient of skewness.

Kurtosis: It measures the concentration of data around the mean of the distribution. The most used statistic is the coefficient of kurtosis.

Coefficient of skewness

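Definition - Sample coefficient of skewness. The sample coefficient of skewness of a variable $X$ is the average of the cubed deviations from the mean divided by the cube of the standard deviation:

$$g_1 = \frac{\sum (x_i - \bar x)^3 f_i}{s^3}$$

where $f_i$ is the relative frequency of value $x_i$ and $s$ is the standard deviation.

The coefficient of skewness measures the symmetry or skewness of the distribution, that is, how many values in the sample are above or below the mean and how far from it. A value $g_1 = 0$ indicates a symmetrical distribution, $g_1 < 0$ a left-skewed distribution and $g_1 > 0$ a right-skewed distribution.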

Example - Grouped data. Using the frequency table of the sample with the heights of students and adding a new column with the deviations from the mean

As it is close to 0, that means that the distribution of heights is fairly symmetrical.

Coefficient of kurtosis

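Definition - Sample coefficient of kurtosis. The sample coefficient of kurtosis of a variable $X$ is the average of the deviations from the mean raised to the fourth power, divided by the fourth power of the standard deviation, minus 3:

$$g_2 = \frac{\sum (x_i - \bar x)^4 f_i}{s^4} - 3$$

where $f_i$ is the relative frequency of value $x_i$ and $s$ is the standard deviation.

The coefficient of kurtosis measures the concentration of data around the mean and the length of the tails of the distribution. The normal (Gaussian bell-shaped) distribution is taken as a reference: $g_2 = 0$ indicates the same concentration as a normal distribution (mesokurtic), $g_2 < 0$ a lower concentration (platykurtic) and $g_2 > 0$ a higher concentration (leptokurtic).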

Example - Grouped data. Using the frequency table of the sample with the heights of students and adding a new column with the deviations from the mean

As it is a negative value but not too far from 0, that means that the distribution of heights is a little bit platykurtic.
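Both shape coefficients can be sketched in Python from the central moments of the sample. The sketch assumes moments that divide by the sample size and a kurtosis coefficient with 3 subtracted, so that a normal distribution gives 0 in both cases. The samples and the function name `shape_coefficients` are hypothetical.

```python
def shape_coefficients(sample):
    """Return (skewness, kurtosis) computed from central moments."""
    n = len(sample)
    mean = sum(sample) / n
    m2 = sum((x - mean) ** 2 for x in sample) / n   # variance
    m3 = sum((x - mean) ** 3 for x in sample) / n
    m4 = sum((x - mean) ** 4 for x in sample) / n
    skewness = m3 / m2 ** 1.5                       # m3 / s^3
    kurtosis = m4 / m2 ** 2 - 3                     # m4 / s^4 - 3
    return skewness, kurtosis

g1_sym, g2_sym = shape_coefficients([1, 2, 3, 4, 5])       # symmetrical sample
g1_right, g2_right = shape_coefficients([1, 1, 1, 2, 10])  # long right tail
print(g1_sym, g2_sym, g1_right, g2_right)
```

The symmetrical sample gives a skewness of 0 and a negative kurtosis, while the sample with a long right tail gives a positive skewness.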

As we will see in the chapters of inferential statistics, many statistical tests can only be applied to normal (bell-shaped) populations.

Normal distributions are symmetrical and mesokurtic, and therefore their coefficients of skewness and kurtosis are equal to 0. So, a way of checking whether a sample comes from a normal population is to look at how far the coefficients of skewness and kurtosis are from 0.

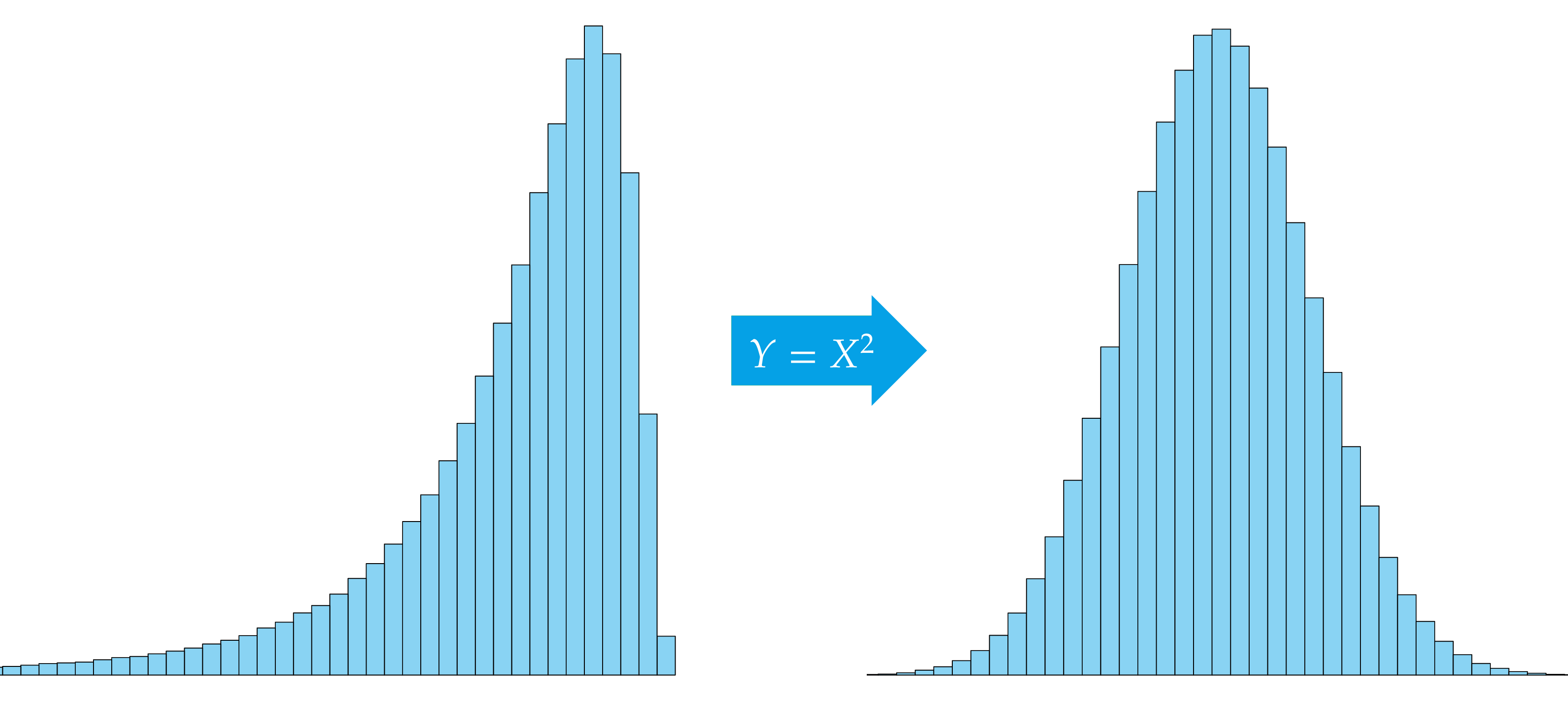

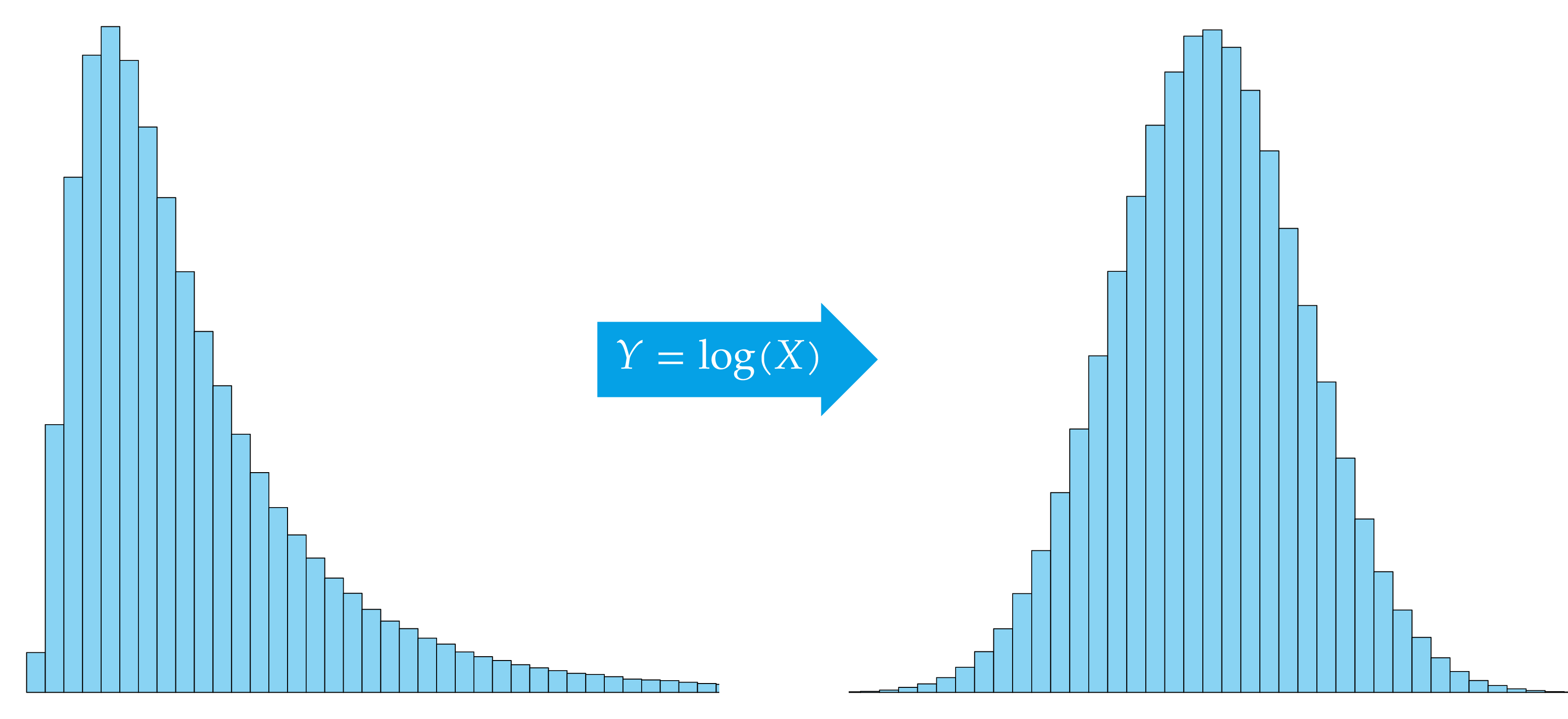

When a distribution is clearly not normal, it is common to apply a transformation to the variable to correct the non-normality.

Non-normal distributions

Non-normal right-skewed distribution

An example of right-skewed distribution is the household income.

Non-normal left-skewed distribution

An example of left-skewed distribution is the age at death.


Non-normal bimodal distribution


Variable transformations

In many cases, the raw sample data are transformed to correct non-normality of distribution or just to get a more appropriate scale.

For example, if we are working with heights in metres and a sample contains the following values:

it is possible to avoid decimals multiplying by 100, that is, changing from metres to centimetres:

And it is also possible to reduce the magnitude of data subtracting the minimum value in the sample, in this case 165 cm:

It is obvious that these data are easier to work with than the original ones. In essence, what has been done is to apply the following transformation to the data:

$$Y = 100X - 165$$

Linear transformations

One of the most common transformations is the linear transformation:

$$Y = a + bX$$

For a linear transformation, the mean and the standard deviation of the transformed variable are

$$\bar y = a + b\bar x, \qquad s_y = |b|\, s_x$$

Additionally, the coefficient of kurtosis does not change and the coefficient of skewness changes only the sign if $b$ is negative.
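These properties can be checked numerically with a short Python sketch. The heights in metres are hypothetical, and the transformation used is the change from metres to centimetres above 165 cm described earlier; the function name `linear_transform` is an assumption made for this illustration.

```python
import statistics

def linear_transform(sample, a, b):
    """Apply y = a + b * x to every value of the sample."""
    return [a + b * x for x in sample]

# Hypothetical heights in metres.
x = [1.65, 1.80, 1.73, 1.70, 1.92]
a, b = -165, 100                       # centimetres above 165 cm
y = linear_transform(x, a, b)

mean_x, sd_x = statistics.mean(x), statistics.pstdev(x)
mean_y, sd_y = statistics.mean(y), statistics.pstdev(y)
print(mean_y, a + b * mean_x)          # both values agree up to rounding
print(sd_y, abs(b) * sd_x)             # both values agree up to rounding
```

The shift `a` moves the mean but has no effect on the standard deviation, which only depends on the scale factor `b`.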

Standardization and standard scores

One of the most common linear transformations is the standardization.

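Definition - Standardized variable and standard scores. The standardized variable of a variable $X$ is the variable that results from subtracting the mean from $X$ and dividing by the standard deviation:

$$Z = \frac{X - \bar x}{s_x}$$

For each value $x_i$ of the sample, the standard score is the value that results from applying the standardization transformation:

$$z_i = \frac{x_i - \bar x}{s_x}$$

The standard score is the number of standard deviations that a value lies above or below the mean. The standardized variable always has mean 0 and standard deviation 1.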
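A minimal Python sketch of the standardization is shown below. The grades of the two subjects are hypothetical, and the standard deviation is computed dividing by the sample size (`statistics.pstdev`); the function name `standard_scores` is an assumption made for this illustration.

```python
import statistics

def standard_scores(sample):
    """Subtract the mean and divide by the standard deviation."""
    mean = statistics.mean(sample)
    sd = statistics.pstdev(sample)
    return [(x - mean) / sd for x in sample]

# Hypothetical grades of 5 students in two subjects.
grades_x = [2, 5, 4, 8, 6]
grades_y = [1, 9, 8, 5, 2]

z_x = standard_scores(grades_x)
z_y = standard_scores(grades_y)
print(z_x[3], z_y[2])   # standard scores of the grade 8 in each subject
```

Both subjects have the same mean, but the grades are more spread out in the second one, so the same grade of 8 lies fewer standard deviations above the mean there.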

Example. The grades of 5 students in 2 subjects are

Did the fourth student get the same performance in subject

It might seem that both students had the same performance in every subject because they have the same grade, but in order to get the performance of every student relative to the group of students, the dispersion of grades in every subject must be considered. For that reason it is better to use the standard score as a measure of relative performance.

That is, the student with an 8 in

Following with this example and considering both subjects, which is the best student?

If we only consider the sum of grades

the best student is the second one.

But if the relative performance is considered, taking the standard scores

the best student is the fourth one.

Non-linear transformations

Non-linear transformations are also common to correct non-normality of distributions.

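The square transformation $Y = X^2$ compresses the scale for small values and expands it for large values, so it is used to correct left-skewed distributions.

The square root transformation $Y = \sqrt{X}$ compresses the scale for large values and expands it for small values, so it is used to correct right-skewed distributions.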
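The effect of a non-linear transformation on the shape of a distribution can be sketched in Python by comparing the coefficient of skewness before and after taking square roots. The right-skewed sample is hypothetical, and the skewness is computed from central moments that divide by the sample size.

```python
import math

def skewness(sample):
    """Third central moment divided by the cube of the standard deviation."""
    n = len(sample)
    mean = sum(sample) / n
    m2 = sum((x - mean) ** 2 for x in sample) / n
    m3 = sum((x - mean) ** 3 for x in sample) / n
    return m3 / m2 ** 1.5

# Hypothetical right-skewed sample: many small values and a few large ones.
raw = [1, 1, 2, 2, 3, 4, 5, 9, 16, 36]
transformed = [math.sqrt(x) for x in raw]

print(skewness(raw), skewness(transformed))
```

The square root pulls the large values towards the rest of the data, so the transformed sample is still right-skewed but less so than the original one.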

Factors

Sometimes it is interesting to describe the frequency distribution of the main variable for different subsamples corresponding to the categories of another variable known as classificatory variable or factor.

Example. Dividing the sample of heights by gender we get two subsamples

Comparing distributions for the levels of a factor

Factors are usually used to compare the distribution of the main variable across the categories of the factor.
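As a minimal sketch, the Python snippet below splits a sample into the subsamples defined by a factor and computes the size, the mean and the standard deviation of each one. The heights, the genders and the function name `summarize_by_factor` are hypothetical.

```python
from collections import defaultdict
import statistics

def summarize_by_factor(values, levels):
    """Size, mean and standard deviation of the values for every level."""
    groups = defaultdict(list)
    for value, level in zip(values, levels):
        groups[level].append(value)
    return {
        level: (len(group), statistics.mean(group), statistics.pstdev(group))
        for level, group in groups.items()
    }

# Hypothetical heights (cm) and gender of 6 students.
heights = [162, 187, 177, 165, 188, 171]
gender = ["F", "M", "M", "F", "M", "F"]

summary = summarize_by_factor(heights, gender)
print(summary)
```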

Example. The following charts allow us to compare the distribution of heights according to gender.