Vector functions of a single real variable

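Definition - Vector function of a single real variable. A vector function of a single real variable or vector field of a scalar variable is a function that maps every scalar value $t\in\mathbb{R}$ into a vector $(x_1(t),\ldots,x_n(t))$ in $\mathbb{R}^n$:

$$f:\mathbb{R}\to\mathbb{R}^n,\qquad f(t)=(x_1(t),\ldots,x_n(t)),$$

where $x_i(t)$, $i=1,\ldots,n$, are real functions of a single real variable known as coordinate functions.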

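The most common vector fields of a scalar variable are in the real plane $\mathbb{R}^2$, where usually they are represented as

$$f(t) = x(t)\mathbf{i} + y(t)\mathbf{j},$$

and in the real space $\mathbb{R}^3$, where usually they are represented as

$$f(t) = x(t)\mathbf{i} + y(t)\mathbf{j} + z(t)\mathbf{k}.$$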

Graphic representation of vector fields

The graphic representation of a vector field in $\mathbb{R}^2$ is a trajectory in the real plane.

The graphic representation of a vector field in $\mathbb{R}^3$ is a trajectory in the real space.

Derivative of a vector field

The concept of derivative as the limit of the average rate of change of a function can be extended easily to vector fields.

Definition - Derivative of a vectorial field. A vectorial field $f(t)=(x_1(t),\ldots,x_n(t))$ is differentiable at a point $t=a$ if the limit

$$\lim_{\Delta t\to 0}\frac{f(a+\Delta t)-f(a)}{\Delta t}$$

exists. In such a case, the value of the limit is known as the derivative of the vector field at $a$, and it is written $f'(a)$.

Many properties of real functions of a single real variable can be extended to vector fields through their component functions. Thus, for instance, the derivative of a vector field can be computed from the derivatives of its component functions.

Theorem. Given a vector field $f(t)=(x_1(t),\ldots,x_n(t))$, if $x_i(t)$ is differentiable at $t=a$ for all $i=1,\ldots,n$, then $f$ is differentiable at $a$ and its derivative is

$$f'(a)=(x_1'(a),\ldots,x_n'(a)).$$

Proof

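The proof for a vectorial field $f(t)=(x(t),y(t))$ in $\mathbb{R}^2$ is easy, since the limit of a vector can be taken coordinate by coordinate:

$$f'(a)=\lim_{\Delta t\to 0}\frac{f(a+\Delta t)-f(a)}{\Delta t}
=\left(\lim_{\Delta t\to 0}\frac{x(a+\Delta t)-x(a)}{\Delta t},\ \lim_{\Delta t\to 0}\frac{y(a+\Delta t)-y(a)}{\Delta t}\right)
=(x'(a),y'(a)).$$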

Kinematics: Curvilinear motion

The notion of derivative as a velocity along a trajectory in the real line can be generalized to a trajectory in any Euclidean space $\mathbb{R}^n$.

In the case of a two-dimensional space $\mathbb{R}^2$, if $f(t)$ describes the position of a moving object in the real plane at any time $t$, taking as reference the coordinate origin $O$ and the unitary vectors $\mathbf{i}=(1,0)$ and $\mathbf{j}=(0,1)$, we can represent the position $P$ of the moving object at every moment $t$ with a vector $\vec{OP}=x(t)\mathbf{i}+y(t)\mathbf{j}$, where the coordinates

$$x=x(t),\qquad y=y(t),\qquad t\in\mathbb{R},$$

are the coordinate functions of $f$.

In this context the derivative of a trajectory $f'(a)=(x'(a),y'(a))$ is the velocity vector of the trajectory $f$ at the moment $t=a$.

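Example. Given the trajectory $f(t)=(\cos t,\sin t)$, $t\in\mathbb{R}$, whose image is the unit circumference centred at the coordinate origin, its coordinate functions are $x(t)=\cos t$, $y(t)=\sin t$, $t\in\mathbb{R}$, and its velocity is

$$v(t)=f'(t)=(-\sin t,\cos t).$$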

In the moment , the object is in position and it is moving with a velocity .

Observe that the modulus of the velocity vector is always 1, as $|v(t)|=\sqrt{(-\sin t)^2+(\cos t)^2}=1$.
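The component-wise rule for the derivative can be checked numerically. The following is a minimal sketch, assuming the unit circle is parametrized as $(\cos t,\sin t)$ and using a central difference in place of the exact limit; the helper names `derivative` and `circle` and the sample moment are illustrative, not part of the text.

```python
import math

def derivative(f, t, h=1e-6):
    """Approximate f'(t) for a vector function f, one coordinate function at a time."""
    ahead, behind = f(t + h), f(t - h)
    # Central difference applied to each coordinate function.
    return tuple((a - b) / (2 * h) for a, b in zip(ahead, behind))

def circle(t):
    """Unit circle trajectory (cos t, sin t); parametrization assumed for illustration."""
    return (math.cos(t), math.sin(t))

t = 1.0  # arbitrary sample moment, not taken from the text
velocity = derivative(circle, t)
speed = math.hypot(velocity[0], velocity[1])
print(velocity, speed)
```

Up to rounding, the velocity agrees with $(-\sin t,\cos t)$ and the speed is 1 for any choice of `t`.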

Tangent line to a trajectory

Tangent line to a trajectory in the plane

Vectorial equation

Given a trajectory $f(t)$ in the real plane, the vectors that are parallel to the velocity $v=f'(a)$ at a moment $a$ are called tangent vectors to the trajectory $f$ at the moment $a$, and the line passing through $P=f(a)$ directed by $v$ is the tangent line to the graph of $f$ at the moment $a$.

Definition - Tangent line to a trajectory. Given a trajectory $f(t)=(x(t),y(t))$ in the real plane $\mathbb{R}^2$, the tangent line to the graph of $f$ at $a$ is the line with equation

$$(x,y)=f(a)+tf'(a)=(x(a),y(a))+t(x'(a),y'(a))=(x(a)+tx'(a),\ y(a)+ty'(a)).$$

Example. We have seen that for the trajectory , , whose image is the unit circumference centred at the coordinate origin, the object position at the moment is and its velocity . Thus the equation of the tangent line to at that moment is

Cartesian and point-slope equations

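From the vectorial equation of the tangent to a trajectory $f(t)$ at the moment $t=a$ we can get the coordinate functions

$$x=x(a)+tx'(a),\qquad y=y(a)+ty'(a),\qquad t\in\mathbb{R},$$

and solving for $t$ and equalling both equations we get the Cartesian equation of the tangent

$$\frac{x-x(a)}{x'(a)}=\frac{y-y(a)}{y'(a)},$$

if $x'(a)\neq 0$ and $y'(a)\neq 0$.

From this equation it is easy to get the point-slope equation of the tangent

$$y-y(a)=\frac{y'(a)}{x'(a)}\,(x-x(a)).$$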

Example. Using the vectorial equation of the tangent of the previous example

its Cartesian equation is

and the point-slope equation is

Normal line to a trajectory in the plane

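We have seen that the tangent line to a trajectory $f(t)$ at $a$ is the line passing through the point $P=f(a)$ directed by the velocity vector $v=f'(a)=(x'(a),y'(a))$. If we take as direction vector a vector orthogonal to $v$, for instance $w=(y'(a),-x'(a))$, we get another line that is known as the normal line to the trajectory.

Definition - Normal line to a trajectory. Given a trajectory $f(t)=(x(t),y(t))$ in the real plane $\mathbb{R}^2$, the normal line to the graph of $f$ at the moment $a$ is the line with equation

$$(x,y)=(x(a),y(a))+t(y'(a),-x'(a))=(x(a)+ty'(a),\ y(a)-tx'(a)).$$

The Cartesian equation is

$$\frac{x-x(a)}{y'(a)}=\frac{y-y(a)}{-x'(a)},$$

and the point-slope equation is

$$y-y(a)=\frac{-x'(a)}{y'(a)}\,(x-x(a)),$$

provided that $x'(a)\neq 0$ and $y'(a)\neq 0$.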

The normal line is always perpendicular to the tangent line as their direction vectors are orthogonal.
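As a quick check of the perpendicularity claim, here is a minimal sketch that builds both lines in parametric form. It assumes the normal direction is taken as $(y'(a),-x'(a))$ (any nonzero multiple would do), and the trajectory $f(t)=(t,t^2)$ at $a=1$ is a hypothetical example chosen only for illustration.

```python
def tangent_and_normal(position, velocity):
    """Return the parametric tangent and normal lines through `position`."""
    (x0, y0), (vx, vy) = position, velocity

    def tangent(t):
        return (x0 + t * vx, y0 + t * vy)

    def normal(t):
        # The direction (vy, -vx) is orthogonal to the velocity (vx, vy).
        return (x0 + t * vy, y0 - t * vx)

    return tangent, normal

# Hypothetical trajectory f(t) = (t, t**2) at the moment a = 1:
# position f(1) = (1, 1) and velocity f'(1) = (1, 2).
position, velocity = (1.0, 1.0), (1.0, 2.0)
tangent, normal = tangent_and_normal(position, velocity)

# Direction vectors recovered from two points of each line.
tangent_dir = tuple(q - p for p, q in zip(tangent(0), tangent(1)))
normal_dir = tuple(q - p for p, q in zip(normal(0), normal(1)))
dot = sum(a * b for a, b in zip(tangent_dir, normal_dir))
print(tangent(1), normal(1), dot)
```

The dot product of the two direction vectors is zero, which is the orthogonality condition used in the text.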

Example. Considering again the trajectory of the unit circumference , , the normal line to the graph of at moment is

the Cartesian equation is

and the point-slope equation is

Tangent and normal lines to a function

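A particular case of tangent and normal lines to a trajectory are the tangent and normal lines to a function of one real variable. For every function $y=f(x)$, the trajectory that traces its graph is

$$g(x)=(x,f(x)),\qquad x\in\mathbb{R},$$

and its velocity is

$$g'(x)=(1,f'(x)),$$

so that the tangent line to $g$ at the moment $a$ is

$$\frac{x-a}{1}=\frac{y-f(a)}{f'(a)}\ \Rightarrow\ y-f(a)=f'(a)(x-a),$$

and the normal line is

$$\frac{x-a}{f'(a)}=\frac{y-f(a)}{-1}\ \Rightarrow\ y-f(a)=\frac{-1}{f'(a)}(x-a),$$

provided that $f'(a)\neq 0$.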

Example. Given the function , the trajectory that traces its graph is and its velocity is . At the moment the trajectory passes through the point with a velocity . Thus, the tangent line at that moment is

and the normal line is

Tangent line to a trajectory in the space

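The concept of tangent line to a trajectory can be easily extended from the real plane to the three-dimensional space $\mathbb{R}^3$.

If $f(t)=(x(t),y(t),z(t))$, $t\in I\subseteq\mathbb{R}$, is a trajectory in the real space $\mathbb{R}^3$, then at the moment $a$, the moving object that follows this trajectory will be at the position $P=(x(a),y(a),z(a))$ with a velocity $v=f'(a)=(x'(a),y'(a),z'(a))$. Thus, the tangent line to $f$ at this moment has the following vectorial equation

$$(x,y,z)=(x(a),y(a),z(a))+t(x'(a),y'(a),z'(a)),$$

and the Cartesian equations are

$$\frac{x-x(a)}{x'(a)}=\frac{y-y(a)}{y'(a)}=\frac{z-z(a)}{z'(a)},$$

provided that $x'(a)\neq 0$, $y'(a)\neq 0$ and $z'(a)\neq 0$.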

Example. Given the trajectory , in the real space, at the moment the trajectory passes through the point

with velocity

and the tangent line to the graph of at that moment is


Normal plane to a trajectory in the space

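In the three-dimensional space $\mathbb{R}^3$, the normal line to a trajectory is not unique. There are an infinite number of normal lines and all of them are in the normal plane.

If $f(t)=(x(t),y(t),z(t))$, $t\in I\subseteq\mathbb{R}$, is a trajectory in the real space $\mathbb{R}^3$, then at the moment $a$, the moving object that follows this trajectory will be at the position $P=(x(a),y(a),z(a))$ with a velocity $v=f'(a)=(x'(a),y'(a),z'(a))$. Thus, using the velocity vector as normal vector, the normal plane to $f$ at this moment has the following vectorial equation

$$(x-x(a),\ y-y(a),\ z-z(a))\cdot(x'(a),y'(a),z'(a))=0,$$

that is, $x'(a)(x-x(a))+y'(a)(y-y(a))+z'(a)(z-z(a))=0$.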
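The normal plane condition is a single dot product, which the following minimal sketch evaluates. The helix $f(t)=(\cos t,\sin t,t)$ at $a=0$ is a hypothetical trajectory used only for illustration, and `normal_plane` is an illustrative helper name.

```python
import math

def normal_plane(point, velocity):
    """Return g with g(q) = velocity . (q - point); the normal plane is the set g(q) = 0."""
    def g(q):
        return sum(v * (qi - pi) for v, qi, pi in zip(velocity, q, point))
    return g

# Hypothetical helix f(t) = (cos t, sin t, t) at the moment a = 0.
a = 0.0
point = (math.cos(a), math.sin(a), a)          # position (1, 0, 0)
velocity = (-math.sin(a), math.cos(a), 1.0)    # velocity (0, 1, 1)
plane = normal_plane(point, velocity)

on_plane = plane((2.0, 0.0, 0.0))    # displaced orthogonally to the velocity
off_plane = plane((1.0, 1.0, 1.0))   # displaced along the velocity
print(on_plane, off_plane)
```

A point displaced orthogonally to the velocity satisfies the equation, while a point displaced along the velocity does not.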

Example. For the trajectory of the previous example , , at the moment the trajectory passes through the point

with velocity

and normal plane to the graph of at that moment is


Functions of several variables

A lot of problems in Geometry, Physics, Chemistry, Biology, etc. involve a variable that depends on two or more variables:

- The area of a triangle depends on two variables that are the base and height lengths.

- The volume of a perfect gas depends on two variables that are the pressure and the temperature.

- The distance travelled by an object in free fall depends on a lot of variables: the time, the area of the cross section of the object, the latitude and longitude of the object, the height above sea level, the air pressure, the air temperature, the wind speed, etc.

These dependencies are expressed with functions of several variables.

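Definition - Functions of several real variables. A function of $n$ real variables or a scalar field from a set $A_1\times\cdots\times A_n\subseteq\mathbb{R}^n$ in a set $B\subseteq\mathbb{R}$, is a relation that maps any tuple $(a_1,\ldots,a_n)\in A_1\times\cdots\times A_n$ into a unique element of $B$, denoted by $f(a_1,\ldots,a_n)$, that is known as the image of $(a_1,\ldots,a_n)$ by $f$.

- The area of a triangle is a real function of two real variables

$$f(x,y)=\frac{xy}{2}.$$

- The volume of a perfect gas is a real function of two real variables

$$v=f(t,p)=\frac{nRt}{p},\quad\text{with } n \text{ and } R \text{ constants}.$$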

Graph of a function of two variables

The graph of a function of two variables $f(x,y)$ is a surface in the real space $\mathbb{R}^3$ where every point of the surface has coordinates $(x,y,z)$, with $z=f(x,y)$.

Example. The function $f(x,y)=\frac{xy}{2}$ that measures the area of a triangle of base $x$ and height $y$ has the graph below.

The function has the peculiar graph below.

Level set of a scalar field

Definition - Level set. Given a scalar field $f:\mathbb{R}^n\to\mathbb{R}$, the level set $c$ of $f$ is the set

$$C_{f,c}=\{(x_1,\ldots,x_n) : f(x_1,\ldots,x_n)=c\},$$

that is, a set where the function takes on the constant value $c$.

Example. Given the scalar field and the point , the level set of that includes is

that is the circumference of radius centred at the origin.

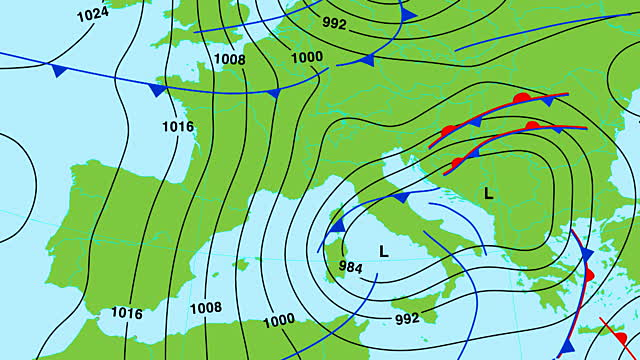

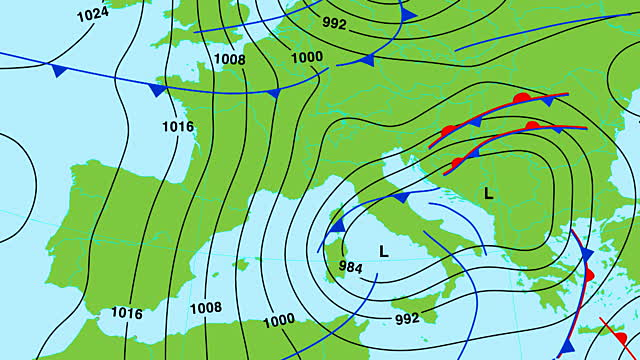

Level sets are common in applications like topographic maps, where the level curves correspond to points with the same height above sea level, and weather maps (isobars), where level curves correspond to points with the same atmospheric pressure.

Partial functions

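Definition - Partial function. Given a scalar field $f:\mathbb{R}^n\to\mathbb{R}$, an $i$-th partial function of $f$ is any function $f_i:\mathbb{R}\to\mathbb{R}$ that results from substituting all the variables of $f$ by constants, except the $i$-th variable, that is:

$$f_i(x)=f(c_1,\ldots,c_{i-1},x,c_{i+1},\ldots,c_n),$$

with $c_j$ ($j=1,\ldots,n$, $j\neq i$) constants.

Example. If we take the function that measures the area of a triangle

$$f(x,y)=\frac{xy}{2},$$

and set the value of the base to $x=c$, then the area of the triangle depends only on the height, and $f$ becomes a function of one variable, that is the partial function

$$f_2(y)=f(c,y)=\frac{cy}{2},\quad\text{with } c \text{ constant}.$$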

Partial derivative notion

Variation of a function with respect to a variable

We can measure the variation of a scalar field with respect to each of its variables in the same way that we measured the variation of a one-variable function.

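Let $z=f(x,y)$ be a scalar field of $\mathbb{R}^2$. If we are at point $(x_0,y_0)$ and we increase the value of $x$ by a quantity $\Delta x$, then we move in the direction of the $x$-axis from the point $(x_0,y_0)$ to the point $(x_0+\Delta x,y_0)$, and the variation of the function is

$$\Delta z=f(x_0+\Delta x,y_0)-f(x_0,y_0).$$

Thus, the rate of change of the function with respect to $x$ along the interval $[x_0,x_0+\Delta x]$ is given by the quotient

$$\frac{\Delta z}{\Delta x}=\frac{f(x_0+\Delta x,y_0)-f(x_0,y_0)}{\Delta x}.$$

Instantaneous rate of change of a scalar field with respect to a variable

If instead of measuring the rate of change in an interval, we measure the rate of change at a point, that is, when $\Delta x$ approaches 0, then we get the instantaneous rate of change, which is the partial derivative with respect to $x$:

$$\lim_{\Delta x\to 0}\frac{\Delta z}{\Delta x}=\lim_{\Delta x\to 0}\frac{f(x_0+\Delta x,y_0)-f(x_0,y_0)}{\Delta x}.$$

The value of this limit, if it exists, is known as the partial derivative of $f$ with respect to the variable $x$ at the point $(x_0,y_0)$; it is written as

$$\frac{\partial f}{\partial x}(x_0,y_0).$$

This partial derivative measures the instantaneous rate of change of $f$ at the point $P=(x_0,y_0)$ when $P$ moves in the $x$-axis direction.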

Geometric interpretation of partial derivatives

Geometrically, a two-variable function $z=f(x,y)$ defines a surface. If we cut this surface with a plane of equation $y=y_0$ (that is, the plane where $y$ is the constant $y_0$) the intersection is a curve, and the partial derivative of $f$ with respect to $x$ at $(x_0,y_0)$ is the slope of the tangent line to that curve at $x=x_0$.


Partial derivative

The concept of partial derivative can be extended easily from two-variable functions to $n$-variable functions.

Definition - Partial derivative. Given an $n$-variable function $f(x_1,\ldots,x_n)$, $f$ is partially differentiable with respect to the variable $x_i$ at the point $a=(a_1,\ldots,a_n)$ if the limit

$$\lim_{h\to 0}\frac{f(a_1,\ldots,a_{i-1},a_i+h,a_{i+1},\ldots,a_n)-f(a_1,\ldots,a_n)}{h}$$

exists. In such a case, the value of the limit is known as the partial derivative of $f$ with respect to $x_i$ at $a$; it is denoted

$$f'_{x_i}(a)=\frac{\partial f}{\partial x_i}(a).$$

Remark. The definition of derivative for one-variable functions is a particular case of this definition for $n=1$.

Partial derivatives computation

When we measure the variation of $f$ with respect to a variable $x_i$ at the point $a=(a_1,\ldots,a_n)$, the other variables remain constant. Thus, if we consider the $i$-th partial function

$$f_i(x_i)=f(a_1,\ldots,a_{i-1},x_i,a_{i+1},\ldots,a_n),$$

the partial derivative of $f$ with respect to $x_i$ can be computed differentiating this function:

$$\frac{\partial f}{\partial x_i}(a)=f_i'(a_i).$$

To differentiate $f(x_1,\ldots,x_n)$ partially with respect to the variable $x_i$, you have to differentiate $f$ as a function of the variable $x_i$, considering the other variables as constants.

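Example of a perfect gas. Consider the function that measures the volume of a perfect gas

$$v(t,p)=\frac{nRt}{p},$$

where $t$ is the temperature, $p$ the pressure and $n$ and $R$ are constants.

The instantaneous rate of change of the volume with respect to the pressure is the partial derivative of $v$ with respect to $p$. To compute this derivative we have to think of $t$ as a constant and differentiate $v$ as if the only variable was $p$:

$$\frac{\partial v}{\partial p}(t,p)=\frac{d}{dp}\left(\frac{nRt}{p}\right)_{t=\text{const}}=\frac{-nRt}{p^2}.$$

In the same way, the instantaneous rate of change of the volume with respect to the temperature is the partial derivative of $v$ with respect to $t$:

$$\frac{\partial v}{\partial t}(t,p)=\frac{d}{dt}\left(\frac{nRt}{p}\right)_{p=\text{const}}=\frac{nR}{p}.$$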
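The rule "differentiate with respect to one variable and hold the others constant" can be sketched numerically. This is a minimal illustration, assuming the perfect gas law in the form $v=nRt/p$; the amount of gas, the rounded gas constant and the sample state are hypothetical values chosen for the example.

```python
N_MOLES = 1.0   # hypothetical amount of gas (mol)
R = 0.082       # gas constant in atm*L/(mol*K), rounded

def volume(t, p):
    """Volume (L) of a perfect gas at temperature t (K) and pressure p (atm)."""
    return N_MOLES * R * t / p

def partial(f, point, i, h=1e-6):
    """Central-difference partial derivative of f with respect to its i-th variable."""
    up, down = list(point), list(point)
    up[i] += h      # only the i-th variable moves,
    down[i] -= h    # the others stay constant
    return (f(*up) - f(*down)) / (2 * h)

state = (300.0, 2.0)  # hypothetical temperature (K) and pressure (atm)
dv_dt = partial(volume, state, 0)
dv_dp = partial(volume, state, 1)
print(dv_dt, dv_dp)
```

The numerical values can be compared with the closed forms $nR/p$ and $-nRt/p^2$ evaluated at the same state.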

Gradient

Definition - Gradient. Given a scalar field $f(x_1,\ldots,x_n)$, the gradient of $f$, denoted by $\nabla f$, is a function that maps every point $a=(a_1,\ldots,a_n)$ to a vector whose coordinates are the partial derivatives of $f$ at $a$,

$$\nabla f(a)=\left(\frac{\partial f}{\partial x_1}(a),\ldots,\frac{\partial f}{\partial x_n}(a)\right).$$

Later we will show that the gradient at a point is a vector with the magnitude and direction of the maximum rate of change of the function at that point. Thus, $\nabla f(a)$ points in the direction of maximum increase of $f$ at $a$, while $-\nabla f(a)$ points in the direction of maximum decrease of $f$ at $a$.
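The definition translates directly into code: the gradient is the list of partial derivatives. The sketch below approximates each partial derivative with a central difference; the scalar field $f(x,y)=x^2+3xy$ and the point $(1,2)$ are hypothetical choices for illustration.

```python
import math

def gradient(f, point, h=1e-6):
    """Approximate the gradient of f at `point` with central differences."""
    grad = []
    for i in range(len(point)):
        up, down = list(point), list(point)
        up[i] += h
        down[i] -= h
        grad.append((f(*up) - f(*down)) / (2 * h))
    return tuple(grad)

def f(x, y):
    """Hypothetical scalar field with exact gradient (2x + 3y, 3x)."""
    return x**2 + 3 * x * y

grad = gradient(f, (1.0, 2.0))
magnitude = math.sqrt(sum(c * c for c in grad))
print(grad, magnitude)
```

At $(1,2)$ the exact gradient is $(8,3)$, so the magnitude of the maximum rate of change is $\sqrt{73}$.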

Example. After heating a surface, the temperature (in °C) at each point (in m) of the surface is given by the function

In what direction will the temperature increase fastest at point of the surface? What magnitude will the maximum increase of temperature have?

The direction of maximum increase of the temperature is given by the gradient

At point the direction is given by the vector

and its magnitude is

Composition of a vectorial field with a scalar field

Multivariate chain rule

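If $f:\mathbb{R}^n\to\mathbb{R}$ is a scalar field and $g:\mathbb{R}\to\mathbb{R}^n$ is a vectorial function, then it is possible to compose $g$ with $f$, so that $f\circ g:\mathbb{R}\to\mathbb{R}$ is a one-variable function.

Theorem - Chain rule. If $g(t)=(x_1(t),\ldots,x_n(t))$ is a vectorial function differentiable at $t$ and $f(x_1,\ldots,x_n)$ is a scalar field differentiable at the point $g(t)$, then $f\circ g$ is differentiable at $t$ and

$$(f\circ g)'(t)=\nabla f(g(t))\cdot g'(t)=\frac{\partial f}{\partial x_1}\frac{dx_1}{dt}+\cdots+\frac{\partial f}{\partial x_n}\frac{dx_n}{dt}.$$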
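The chain rule can be verified numerically by comparing both sides of the formula. The sketch below assumes a hypothetical scalar field $f(x,y)=x^2y$ and a hypothetical vectorial function $g(t)=(\cos t,\sin t)$; neither is taken from the text.

```python
import math

def f(x, y):
    return x**2 * y            # hypothetical scalar field

def grad_f(x, y):
    return (2 * x * y, x**2)   # its exact gradient

def g(t):
    return (math.cos(t), math.sin(t))    # hypothetical vectorial function

def g_prime(t):
    return (-math.sin(t), math.cos(t))   # its exact derivative

def composed(t):
    return f(*g(t))            # the one-variable function f o g

t, h = 0.5, 1e-6
# Left-hand side: derivative of the composed function (central difference).
direct = (composed(t + h) - composed(t - h)) / (2 * h)
# Right-hand side: dot product of the gradient at g(t) with g'(t).
chain = sum(a * b for a, b in zip(grad_f(*g(t)), g_prime(t)))
print(direct, chain)
```

Both values agree up to the error of the finite difference.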

Example. Let us consider the scalar field and the vectorial function in the real plane, then

and

We can get the same result differentiating the composed function directly

and its derivative is

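The chain rule for the composition of a vectorial function with a scalar field allows us to get the algebra of derivatives for one-variable functions easily:

To infer the derivative of the sum of two functions $u(t)$ and $v(t)$, we can take the scalar field $f(x,y)=x+y$ and the vectorial function $g(t)=(u(t),v(t))$. Applying the chain rule we get

$$(u+v)'(t)=(f\circ g)'(t)=\nabla f(g(t))\cdot g'(t)=(1,1)\cdot(u'(t),v'(t))=u'(t)+v'(t).$$

To infer the derivative of the quotient of two functions $u(t)$ and $v(t)$, we can take the scalar field $f(x,y)=x/y$ and the vectorial function $g(t)=(u(t),v(t))$. Applying the chain rule we get

$$\left(\frac{u}{v}\right)'(t)=\nabla f(g(t))\cdot g'(t)=\left(\frac{1}{v(t)},\frac{-u(t)}{v(t)^2}\right)\cdot(u'(t),v'(t))=\frac{u'(t)v(t)-u(t)v'(t)}{v(t)^2}.$$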

Tangent plane and normal line to a surface

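Let $C$ be the level set of a scalar field $f$ that includes a point $P$. If $v$ is the velocity at $P$ of a trajectory following $C$, then

$$\nabla f(P)\cdot v=0.$$

Proof

If we take the trajectory $g(t)$ that follows the level set $C$ and passes through $P$ at time $t=t_0$, that is $P=g(t_0)$, so $v=g'(t_0)$, then

$$(f\circ g)(t)=f(g(t))=f(P),$$

that is constant at any $t$. Thus, applying the chain rule we have

$$(f\circ g)'(t)=\nabla f(g(t))\cdot g'(t)=0,$$

and, particularly, at $t=t_0$, we have

$$\nabla f(P)\cdot v=0.$$

That means that the gradient of $f$ at $P$ is normal to $C$ at $P$, provided that the gradient is not zero.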
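The orthogonality between the gradient and a level set can be checked on a simple case. This minimal sketch assumes the scalar field $f(x,y)=x^2+y^2$ and the trajectory $g(t)=(5\cos t,5\sin t)$, which follows its level set $f=25$; the sample moments are arbitrary.

```python
import math

def f(x, y):
    return x**2 + y**2           # assumed scalar field

def grad_f(x, y):
    return (2 * x, 2 * y)        # its exact gradient

def g(t):
    # Trajectory following the level set f = 25 (circle of radius 5).
    return (5 * math.cos(t), 5 * math.sin(t))

def g_prime(t):
    return (-5 * math.sin(t), 5 * math.cos(t))

moments = (0.0, 0.7, 2.0, 4.5)   # arbitrary sample moments
levels = [f(*g(t)) for t in moments]
dots = [sum(a * b for a, b in zip(grad_f(*g(t)), g_prime(t))) for t in moments]
print(levels, dots)
```

Every level is 25 and every dot product vanishes up to rounding, so the gradient is normal to the circle at each sampled point.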

Normal and tangent lines to a curve in the plane

Example. Given the scalar field , and the point , the level set of that passes through , that satisfies , is the circle with radius 5 centred at the origin of coordinates. Thus, taking as a normal vector the gradient of

at the point is , and the normal line to the circle at is

On the other hand, the tangent line to the circle at is

Normal line and tangent plane to a surface in the space

Example. Given the scalar field , and the point , the level set of that passes through , that satisfies , is the paraboloid . Thus, taking as a normal vector the gradient of

at the point is , and the normal line to the paraboloid at is

On the other hand, the tangent plane to the paraboloid at is

The graph of the paraboloid and the normal line and the tangent plane to the graph of at the point are below.


Directional derivative

For a scalar field $f(x,y)$, we have seen that the partial derivative $\frac{\partial f}{\partial x}(x_0,y_0)$ is the instantaneous rate of change of $f$ with respect to $x$ at point $P=(x_0,y_0)$, that is, when we move along the $x$-axis.

In the same way, $\frac{\partial f}{\partial y}(x_0,y_0)$ is the instantaneous rate of change of $f$ with respect to $y$ at the point $P=(x_0,y_0)$, that is, when we move along the $y$-axis.

But, what happens if we move along any other direction?

The instantaneous rate of change of $f$ at the point $P=(x_0,y_0)$ along the direction of a unitary vector $u$ is known as the directional derivative.

Definition - Directional derivative. Given a scalar field $f$ of $\mathbb{R}^n$, a point $P$ and a unitary vector $u$ in that space, we say that $f$ is differentiable at $P$ along the direction of $u$ if the limit

$$f'_u(P)=\lim_{h\to 0}\frac{f(P+hu)-f(P)}{h}$$

exists. In such a case, the value of the limit is known as the directional derivative of $f$ at the point $P$ along the direction of $u$.

Theorem - Directional derivative. Given a scalar field $f$ of $\mathbb{R}^n$, a point $P$ and a unitary vector $u$ in that space, the directional derivative of $f$ at the point $P$ along the direction of $u$ can be computed as the dot product of the gradient of $f$ at $P$ and the unitary vector $u$:

$$f'_u(P)=\nabla f(P)\cdot u.$$

Proof

If we consider a unitary vector $u$, the trajectory that passes through $P$, following the direction of $u$, has equation

$$g(t)=P+tu,\qquad t\in\mathbb{R}.$$

For $t=0$, this trajectory passes through the point $P=g(0)$ with velocity $u=g'(0)$.

Thus, the directional derivative of $f$ at the point $P$ along the direction of $u$ is

$$(f\circ g)'(0)=\nabla f(g(0))\cdot g'(0)=\nabla f(P)\cdot u.$$

The partial derivatives are the directional derivatives along the vectors of the canonical basis.

Example. Given the function , its gradient is

The directional derivative of at the point , along the unit vector is

To compute the directional derivative along a non-unitary vector $v$, we have to use the unitary vector that results from normalizing $v$ with the transformation

$$u=\frac{v}{|v|}.$$
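Putting the theorem and the normalization step together gives a short recipe. The following is a minimal sketch, assuming a hypothetical scalar field $f(x,y)=x^2y$, the point $P=(1,2)$ and the non-unitary vector $v=(3,4)$; it also compares the result with the limit definition using a small step.

```python
import math

def directional_derivative(grad, v):
    """Dot product of the gradient with the unit vector obtained by normalizing v."""
    norm = math.sqrt(sum(c * c for c in v))
    unit = [c / norm for c in v]
    return sum(g * c for g, c in zip(grad, unit))

def f(x, y):
    return x**2 * y              # hypothetical scalar field

def grad_f(x, y):
    return (2 * x * y, x**2)     # its exact gradient

P, v = (1.0, 2.0), (3.0, 4.0)    # hypothetical point and direction
exact = directional_derivative(grad_f(*P), v)

# Limit definition with a small step h along the unit vector u = v / |v|.
h, u = 1e-6, (0.6, 0.8)
approx = (f(P[0] + h * u[0], P[1] + h * u[1]) - f(*P)) / h
print(exact, approx)
```

Here $\nabla f(P)=(4,1)$ and $u=(0.6,0.8)$, so the directional derivative is $4\cdot 0.6+1\cdot 0.8=3.2$.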

Geometric interpretation of the directional derivative

Geometrically, a two-variable function $z=f(x,y)$ defines a surface. If we cut this surface with the vertical plane that passes through the point $P$ with the direction of vector $u$, the intersection is a curve, and the directional derivative of $f$ at $P$ along the direction of $u$ is the slope of the tangent line to that curve at point $P$.


Growth of a scalar field along the gradient

We have seen that for any unitary vector $u$

$$f'_u(P)=\nabla f(P)\cdot u=|\nabla f(P)|\cos\theta,$$

where $\theta$ is the angle between $u$ and the gradient $\nabla f(P)$.

Taking into account that $-1\leq\cos\theta\leq 1$, for any unitary vector $u$ it is satisfied that

$$-|\nabla f(P)|\leq f'_u(P)\leq|\nabla f(P)|.$$

Furthermore, if $u$ has the same direction and sense as the gradient, we have $f'_u(P)=|\nabla f(P)|\cos 0=|\nabla f(P)|$.

Therefore, the maximum increase of a scalar field at a point is along the direction of the gradient at that point.

In the same manner, if $u$ has the same direction as the gradient but the opposite sense, we have $f'_u(P)=|\nabla f(P)|\cos\pi=-|\nabla f(P)|$.

Therefore, the maximum decrease of a scalar field at a point is along the opposite direction of the gradient at that point.
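The bounds above can be explored by brute force: sample unit vectors in every direction and look for the largest and smallest directional derivative. This is a minimal sketch in the plane, assuming a hypothetical gradient $\nabla f(P)=(4,1)$ and a grid of 3600 directions.

```python
import math

grad = (4.0, 1.0)  # hypothetical gradient of f at some point P

def directional(theta):
    """Directional derivative along the unit vector (cos theta, sin theta)."""
    return grad[0] * math.cos(theta) + grad[1] * math.sin(theta)

# Sample directions every tenth of a degree.
angles = [2 * math.pi * k / 3600 for k in range(3600)]
best = max(angles, key=directional)
largest = directional(best)
smallest = min(directional(theta) for theta in angles)

grad_norm = math.hypot(grad[0], grad[1])
grad_angle = math.atan2(grad[1], grad[0])
print(largest, smallest, grad_norm, best, grad_angle)
```

The largest sampled value is close to $|\nabla f(P)|$ and is reached at the angle of the gradient, while the smallest is close to $-|\nabla f(P)|$.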

Implicit derivation

When we have a relation $f(x,y)=0$, sometimes we can consider $y$ as an implicit function of $x$, at least in a neighbourhood of a point $(x_0,y_0)$.

The equation $x^2+y^2=25$, whose graph is the circle of radius 5 centred at the origin of coordinates, is not a function, because if we solve the equation for $y$, we have two images for some values of $x$,

$$y=\pm\sqrt{25-x^2}.$$

However, near a point of the upper half of the circle we can represent the relation as the function $y=\sqrt{25-x^2}$, and near a point of the lower half we can represent the relation as the function $y=-\sqrt{25-x^2}$.

If an equation $f(x,y)=0$ defines $y$ as an implicit function of $x$, $y=h(x)$, in a neighbourhood of $(x_0,y_0)$, then we can compute the derivative of $y$, $h'(x)$, even if we do not know the explicit formula for $h$.

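Theorem - Implicit derivation. Let $f(x,y):\mathbb{R}^2\to\mathbb{R}$ be a two-variable function and let $(x_0,y_0)$ be a point in $\mathbb{R}^2$ such that $f(x_0,y_0)=0$. If $f$ has partial derivatives continuous at $(x_0,y_0)$ and $\frac{\partial f}{\partial y}(x_0,y_0)\neq 0$, then there is an open interval $I\subset\mathbb{R}$ with $x_0\in I$ and a function $h(x):I\to\mathbb{R}$ such that

- $y_0=h(x_0)$.

- $f(x,h(x))=0$ for all $x\in I$.

- $h$ is differentiable on $I$, and

$$y'=h'(x)=\frac{-\dfrac{\partial f}{\partial x}}{\dfrac{\partial f}{\partial y}}.$$

Proof. To prove the last result, take the trajectory $g(x)=(x,h(x))$ on the interval $I$. Then

$$(f\circ g)(x)=f(x,h(x))=0.$$

Thus, using the chain rule we have

$$(f\circ g)'(x)=\nabla f(g(x))\cdot g'(x)=\left(\frac{\partial f}{\partial x},\frac{\partial f}{\partial y}\right)\cdot(1,h'(x))=\frac{\partial f}{\partial x}+\frac{\partial f}{\partial y}h'(x)=0,$$

from where we can deduce

$$y'=h'(x)=\frac{-\dfrac{\partial f}{\partial x}}{\dfrac{\partial f}{\partial y}}.$$

This technique, which allows us to compute $y'$ in a neighbourhood of $x_0$ without the explicit formula of $y=h(x)$, is known as implicit derivation.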

Example. Consider the equation of the circle of radius 5 centred at the origin, $x^2+y^2=25$. It can also be written as

$$f(x,y)=x^2+y^2-25=0.$$

Take the point that satisfies the equation, .

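As $f$ has partial derivatives $\frac{\partial f}{\partial x}=2x$ and $\frac{\partial f}{\partial y}=2y$, that are continuous at that point, and $\frac{\partial f}{\partial y}\neq 0$ there (which requires $y\neq 0$), then $y$ can be expressed as a function of $x$ in a neighbourhood of the point and its derivative is

$$y'=\frac{-\dfrac{\partial f}{\partial x}}{\dfrac{\partial f}{\partial y}}=\frac{-2x}{2y}=\frac{-x}{y}.$$

In this particular case, where we know the explicit formula of $y$ (for instance $y=\sqrt{25-x^2}$ on the upper half of the circle), we can get the same result computing the derivative as usual

$$y'=\frac{-2x}{2\sqrt{25-x^2}}=\frac{-x}{\sqrt{25-x^2}}=\frac{-x}{y}.$$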
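Both routes to the derivative can be compared numerically. The sketch below assumes the circle is written as $f(x,y)=x^2+y^2-25=0$ and evaluates the slope at the point $(3,4)$, which is a hypothetical choice of a point on the circle, not necessarily the one used in the example.

```python
import math

def f_x(x, y):
    return 2 * x     # partial derivative of x**2 + y**2 - 25 with respect to x

def f_y(x, y):
    return 2 * y     # partial derivative with respect to y

def implicit_derivative(x, y):
    """Slope y' = -f_x / f_y, valid only where f_y does not vanish."""
    if f_y(x, y) == 0:
        raise ValueError("the theorem does not apply where f_y is zero")
    return -f_x(x, y) / f_y(x, y)

x0, y0 = 3.0, 4.0                     # hypothetical point on the circle
slope = implicit_derivative(x0, y0)

def upper_branch(x):
    return math.sqrt(25 - x * x)      # explicit formula near (3, 4)

h = 1e-6
numeric = (upper_branch(x0 + h) - upper_branch(x0 - h)) / (2 * h)
print(slope, numeric)
```

The implicit formula gives $-3/4$, and the finite difference on the explicit branch agrees up to rounding.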

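The implicit function theorem can be generalized to functions of several variables.

Theorem - Implicit derivation. Let $f(x_1,\ldots,x_n,y):\mathbb{R}^{n+1}\to\mathbb{R}$ be an $(n+1)$-variable function and let $(a_1,\ldots,a_n,b)$ be a point in $\mathbb{R}^{n+1}$ such that $f(a_1,\ldots,a_n,b)=0$. If $f$ has partial derivatives continuous at $(a_1,\ldots,a_n,b)$ and $\frac{\partial f}{\partial y}(a_1,\ldots,a_n,b)\neq 0$, then there is a region $I\subset\mathbb{R}^n$ with $(a_1,\ldots,a_n)\in I$ and a function $h(x_1,\ldots,x_n):I\to\mathbb{R}$ such that

- $b=h(a_1,\ldots,a_n)$.

- $f(x_1,\ldots,x_n,h(x_1,\ldots,x_n))=0$ for all $(x_1,\ldots,x_n)\in I$.

- $h$ is differentiable on $I$, and

$$\frac{\partial y}{\partial x_i}=\frac{-\dfrac{\partial f}{\partial x_i}}{\dfrac{\partial f}{\partial y}}.$$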

Second order partial derivatives

As the partial derivatives of a function are also functions of several variables, we can differentiate each of them partially.

If a function $f(x_1,\ldots,x_n)$ has a partial derivative $f'_{x_i}(x_1,\ldots,x_n)$ with respect to the variable $x_i$ in a set $A$, then we can differentiate $f'_{x_i}$ partially again with respect to the variable $x_j$. This second derivative, when it exists, is known as the second order partial derivative of $f$ with respect to the variables $x_i$ and $x_j$; it is written as

$$\frac{\partial^2 f}{\partial x_j\partial x_i}=\frac{\partial}{\partial x_j}\left(\frac{\partial f}{\partial x_i}\right).$$

In the same way we can define higher order partial derivatives.

Example. The two-variables function

has 4 second order partial derivatives:

Hessian matrix and Hessian

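Definition - Hessian matrix. Given a scalar field $f(x_1,\ldots,x_n)$, with second order partial derivatives at the point $a=(a_1,\ldots,a_n)$, the Hessian matrix of $f$ at $a$, denoted by $\nabla^2 f(a)$, is the matrix

$$\nabla^2 f(a)=\begin{pmatrix}
\dfrac{\partial^2 f}{\partial x_1^2}(a) & \dfrac{\partial^2 f}{\partial x_1\partial x_2}(a) & \cdots & \dfrac{\partial^2 f}{\partial x_1\partial x_n}(a)\\
\dfrac{\partial^2 f}{\partial x_2\partial x_1}(a) & \dfrac{\partial^2 f}{\partial x_2^2}(a) & \cdots & \dfrac{\partial^2 f}{\partial x_2\partial x_n}(a)\\
\vdots & \vdots & \ddots & \vdots\\
\dfrac{\partial^2 f}{\partial x_n\partial x_1}(a) & \dfrac{\partial^2 f}{\partial x_n\partial x_2}(a) & \cdots & \dfrac{\partial^2 f}{\partial x_n^2}(a)
\end{pmatrix}$$

The determinant of this matrix is known as the Hessian of $f$ at $a$; it is denoted $Hf(a)=|\nabla^2 f(a)|$.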

Example. Consider again the two-variables function

Its Hessian matrix is

At point is

And its Hessian is

Symmetry of second partial derivatives

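In the previous example we can observe that the mixed derivatives of second order $\frac{\partial^2 f}{\partial y\partial x}$ and $\frac{\partial^2 f}{\partial x\partial y}$ are the same. This fact is due to the following result.

Theorem - Symmetry of second partial derivatives. If $f(x_1,\ldots,x_n)$ is a scalar field with second order partial derivatives $\frac{\partial^2 f}{\partial x_i\partial x_j}$ and $\frac{\partial^2 f}{\partial x_j\partial x_i}$ continuous at a point $a=(a_1,\ldots,a_n)$, then

$$\frac{\partial^2 f}{\partial x_i\partial x_j}(a)=\frac{\partial^2 f}{\partial x_j\partial x_i}(a).$$

This means that, when computing a second partial derivative, the order of differentiation does not matter.

As a consequence, if the function satisfies the requirements of the theorem for all the second order partial derivatives, the Hessian matrix is symmetric.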
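The Hessian matrix and its symmetry can be illustrated with finite differences. The following is a minimal sketch, assuming a hypothetical scalar field $f(x,y)=x^3y^2$ whose exact second derivatives at $(1,2)$ are $24$, $12$, $12$ and $2$; the helper `hessian` is illustrative and trades accuracy for brevity.

```python
def hessian(f, point, h=1e-4):
    """Approximate the Hessian matrix of f at `point` with central differences."""
    n = len(point)

    def shifted(i, si, j, sj):
        # Evaluate f after moving variable i by si*h and variable j by sj*h.
        q = list(point)
        q[i] += si * h
        q[j] += sj * h
        return f(*q)

    return [[(shifted(i, 1, j, 1) - shifted(i, 1, j, -1)
              - shifted(i, -1, j, 1) + shifted(i, -1, j, -1)) / (4 * h * h)
             for j in range(n)]
            for i in range(n)]

def f(x, y):
    return x**3 * y**2    # hypothetical scalar field

H = hessian(f, (1.0, 2.0))
determinant = H[0][0] * H[1][1] - H[0][1] * H[1][0]   # the Hessian of f at (1, 2)
print(H, determinant)
```

The two mixed entries coincide up to rounding, as the theorem predicts, and the determinant is close to $24\cdot 2-12^2=-96$.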

Taylor polynomials

Linear approximation of a scalar field

In a previous chapter we saw how to approximate a one-variable function with a Taylor polynomial. This can be generalized to functions of several variables.

If $P$ is a point in the domain of a scalar field $f$ and $v$ is a vector, the first degree Taylor formula of $f$ around $P$ is

$$f(P+v)=f(P)+\nabla f(P)\cdot v+R^1_{f,P}(v),$$

where

$$P^1_{f,P}(v)=f(P)+\nabla f(P)\cdot v$$

is the first degree Taylor polynomial of $f$ at $P$, and $R^1_{f,P}(v)$ is the Taylor remainder for the vector $v$, that is the error in the approximation.

The remainder satisfies

$$\lim_{|v|\to 0}\frac{R^1_{f,P}(v)}{|v|}=0.$$

The first degree Taylor polynomial for a function of two variables is the tangent plane to the graph of $f$ at $P$.

Linear approximation of a two-variable function

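If $f$ is a scalar field of two variables $f(x,y)$ and $P=(x_0,y_0)$, as for any point $Q=(x,y)$ we can take the vector $v=\vec{PQ}=(x-x_0,y-y_0)$, then the first degree Taylor polynomial of $f$ at $P$ can be written as

$$P^1_{f,P}(x,y)=f(x_0,y_0)+\frac{\partial f}{\partial x}(x_0,y_0)(x-x_0)+\frac{\partial f}{\partial y}(x_0,y_0)(y-y_0).$$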

Example. Given the scalar field , its gradient is

and the first degree Taylor polynomial at the point is

This polynomial approximates near the point . For instance,

The graph of the scalar field and the first degree Taylor polynomial of at the point is below.

Quadratic approximation of a scalar field

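If $P$ is a point in the domain of a scalar field $f$ and $v$ is a vector, the second degree Taylor formula of $f$ around $P$ is

$$f(P+v)=f(P)+\nabla f(P)\cdot v+\frac{1}{2}\,v^{T}\nabla^2 f(P)\,v+R^2_{f,P}(v),$$

where

$$P^2_{f,P}(v)=f(P)+\nabla f(P)\cdot v+\frac{1}{2}\,v^{T}\nabla^2 f(P)\,v$$

is the second degree Taylor polynomial of $f$ at the point $P$, and $R^2_{f,P}(v)$ is the Taylor remainder for the vector $v$, that is the error in the approximation.

The remainder satisfies

$$\lim_{|v|\to 0}\frac{R^2_{f,P}(v)}{|v|^2}=0.$$

This means that the remainder tends to zero faster than the square of the modulus of $v$.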

Quadratic approximation of a two-variable function

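If $f$ is a scalar field of two variables $f(x,y)$ and $P=(x_0,y_0)$, then the second degree Taylor polynomial of $f$ at $P$ can be written as

$$\begin{aligned}
P^2_{f,P}(x,y)={}&f(x_0,y_0)+\frac{\partial f}{\partial x}(x_0,y_0)(x-x_0)+\frac{\partial f}{\partial y}(x_0,y_0)(y-y_0)\\
&+\frac{1}{2}\left(\frac{\partial^2 f}{\partial x^2}(x_0,y_0)(x-x_0)^2+2\frac{\partial^2 f}{\partial y\partial x}(x_0,y_0)(x-x_0)(y-y_0)+\frac{\partial^2 f}{\partial y^2}(x_0,y_0)(y-y_0)^2\right).
\end{aligned}$$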
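To see how the quadratic term improves on the linear approximation, the sketch below evaluates both polynomials for a hypothetical scalar field $f(x,y)=e^x\cos y$ at $P=(0,0)$, where $f(P)=1$, $\nabla f(P)=(1,0)$ and the Hessian matrix has rows $(1,0)$ and $(0,-1)$. The field, the point and the evaluation point $(0.1,0.2)$ are assumptions made for illustration.

```python
import math

def f(x, y):
    return math.exp(x) * math.cos(y)   # hypothetical scalar field

# Exact values at P = (0, 0), computed by hand for this field.
x0, y0 = 0.0, 0.0
f0 = 1.0
fx, fy = 1.0, 0.0
fxx, fxy, fyy = 1.0, 0.0, -1.0

def taylor1(x, y):
    """First degree Taylor polynomial of f at P."""
    dx, dy = x - x0, y - y0
    return f0 + fx * dx + fy * dy

def taylor2(x, y):
    """Second degree Taylor polynomial of f at P."""
    dx, dy = x - x0, y - y0
    return taylor1(x, y) + 0.5 * (fxx * dx**2 + 2 * fxy * dx * dy + fyy * dy**2)

q = (0.1, 0.2)
error1 = abs(f(*q) - taylor1(*q))
error2 = abs(f(*q) - taylor2(*q))
print(taylor1(*q), taylor2(*q), f(*q), error1, error2)
```

Near $P$ the second degree polynomial gives the smaller error, in line with the faster decay of its remainder.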

Example. Given the scalar field , its gradient is

its Hessian matrix is

and the second degree Taylor polynomial of at the point is

Thus,

The graph of the scalar field and the second degree Taylor polynomial of at the point is below.


Relative extrema

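Definition - Relative extrema.

A scalar field $f$ in $\mathbb{R}^n$ has a relative maximum at a point $P$ if there is a value $\epsilon>0$ such that

$$f(P)\geq f(X)\quad\text{for all } X \text{ with } |\vec{PX}|<\epsilon.$$

$f$ has a relative minimum at $P$ if there is a value $\epsilon>0$ such that

$$f(P)\leq f(X)\quad\text{for all } X \text{ with } |\vec{PX}|<\epsilon.$$

Both relative maxima and minima are known as relative extrema of $f$.

Critical points

Theorem - Critical points. If a scalar field $f$ in $\mathbb{R}^n$ has a relative maximum or minimum at a point $P$, then $P$ is a critical or stationary point of $f$, that is, a point where the gradient vanishes

$$\nabla f(P)=0.$$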

Proof

Taking the trajectory that passes through $P$ with the direction of the gradient at that point,

$$g(t)=P+t\nabla f(P),$$

the function $h=(f\circ g)(t)$ does not decrease at $t=0$ since

$$h'(0)=(f\circ g)'(0)=\nabla f(g(0))\cdot g'(0)=\nabla f(P)\cdot\nabla f(P)=|\nabla f(P)|^2\geq 0,$$

and it only vanishes if $\nabla f(P)=0$.

Thus, if $\nabla f(P)\neq 0$, $f$ cannot have a relative maximum at $P$ since following the trajectory of $g$ from $P$ there are points where $f$ has an image greater than the image at $P$. In the same way, following the trajectory of $g$ in the opposite direction there are points where $f$ has an image less than the image at $P$, so $f$ cannot have a relative minimum at $P$.

Example. Given the scalar field , it is obvious that only has a relative minimum at since

It is easy to check that has a critical point at , that is .

Saddle points

Not all the critical points of a scalar field are points where the scalar field has relative extrema. If we take, for instance, the scalar field , its gradient is

that only vanishes at . However, this point is not a relative maximum since the points in the -axis have images , nor a relative minimum since the points in the -axis have images . This type of critical points that are not relative extrema are known as saddle points.

Analysis of the relative extrema

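From the second degree Taylor formula of a scalar field $f$ at a point $P$ we have

$$f(P+v)-f(P)\approx\nabla f(P)\cdot v+\frac{1}{2}\,v^{T}\nabla^2 f(P)\,v.$$

Thus, if $P$ is a critical point of $f$, as $\nabla f(P)=0$, we have

$$f(P+v)-f(P)\approx\frac{1}{2}\,v^{T}\nabla^2 f(P)\,v.$$

Therefore, the sign of $f(P+v)-f(P)$ is the sign of the second degree term $v^{T}\nabla^2 f(P)\,v$.

There are four possibilities:

- Definite positive: $v^{T}\nabla^2 f(P)\,v>0$ for all $v\neq 0$.

- Definite negative: $v^{T}\nabla^2 f(P)\,v<0$ for all $v\neq 0$.

- Indefinite: $v^{T}\nabla^2 f(P)\,v>0$ for some $v$ and $v^{T}\nabla^2 f(P)\,v<0$ for some other $v$.

- Semidefinite: $v^{T}\nabla^2 f(P)\,v\geq 0$ for all $v$, or $v^{T}\nabla^2 f(P)\,v\leq 0$ for all $v$, with equality for some $v\neq 0$.

Thus, depending on the sign of $v^{T}\nabla^2 f(P)\,v$, we have

Theorem.

Given a critical point $P$ of a scalar field $f$, it holds that

- If $\nabla^2 f(P)$ is definite positive then $f$ has a relative minimum at $P$.

- If $\nabla^2 f(P)$ is definite negative then $f$ has a relative maximum at $P$.

- If $\nabla^2 f(P)$ is indefinite then $f$ has a saddle point at $P$.

When $\nabla^2 f(P)$ is semidefinite we cannot draw any conclusion and we need higher order partial derivatives to classify the critical point.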

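Analysis of the relative extrema of a scalar field in $\mathbb{R}^2$

In the particular case of a scalar field of two variables, we have

Theorem.

Given a critical point $P=(x_0,y_0)$ of a scalar field $f(x,y)$, it holds that

- If $Hf(P)>0$ and $\frac{\partial^2 f}{\partial x^2}(x_0,y_0)>0$ then $f$ has a relative minimum at $P$.

- If $Hf(P)>0$ and $\frac{\partial^2 f}{\partial x^2}(x_0,y_0)<0$ then $f$ has a relative maximum at $P$.

- If $Hf(P)<0$ then $f$ has a saddle point at $P$.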
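The test in the theorem above can be sketched as a small function. This is a minimal illustration, assuming the conditions compare the Hessian determinant and $\frac{\partial^2 f}{\partial x^2}$ with zero, and that the second order partial derivatives at the critical point have already been computed; the sample fields in the comments are hypothetical.

```python
def classify(fxx, fxy, fyy):
    """Classify a critical point from its second order partial derivatives."""
    hessian = fxx * fyy - fxy**2   # determinant of the Hessian matrix
    if hessian > 0 and fxx > 0:
        return "relative minimum"
    if hessian > 0 and fxx < 0:
        return "relative maximum"
    if hessian < 0:
        return "saddle point"
    return "inconclusive"          # the Hessian vanishes: higher order terms are needed

# Second derivatives at (0, 0) of some hypothetical fields.
bowl = classify(2.0, 0.0, 2.0)      # x**2 + y**2
dome = classify(-2.0, 0.0, -2.0)    # -x**2 - y**2
saddle = classify(2.0, 0.0, -2.0)   # x**2 - y**2
flat = classify(0.0, 0.0, 0.0)      # x**4 + y**4
print(bowl, dome, saddle, flat)
```

The last case shows why a vanishing Hessian is inconclusive: $x^4+y^4$ has a relative minimum at the origin, but the second order derivatives alone cannot detect it.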

Example. Given the scalar field , its gradient is

and it has critical points at , , and .

The Hessian matrix is

and the Hessian is

Thus, we have

The graph of the function and its relative extrema and saddle points are shown below.